Updated: 1 December, 2025

20 September, 2025

Application security (AppSec) remains one of the toughest challenges modern organizations face. Despite heavy investments and the adoption of frameworks like OWASP SAMM, OWASP DSOMM, and BSIMM, many companies struggle to make meaningful progress. Teams keep patching and mitigating vulnerabilities, yet the backlog keeps growing. But why is that? We have massive knowledge bases, AI-powered tools, and widespread training programs. What’s going wrong? Are we making small mistakes here and there, or are we focusing on the wrong things entirely?

Over the past three years, I’ve worked alongside several OWASP SAMM core team members to conduct structured evaluations across several organizations. We have seen the same critical issues across most of them.

Most mistakes boil down to two root causes. First, tools alone do not solve problems; they even create some. Their purpose is to support people and processes, not replace them. Second, teams often treat security activities as checkbox exercises, i.e., compliance. I am going to say it loud and clear here: compliance is the enemy of security unless you put security first.

In this blog, I will walk you through the most common AppSec failures we have observed. I will also share clear and actionable guidance on what good looks like.

Assessment methodology

Together with several other OWASP SAMM core team members, I have conducted over 40 application security assessments. We used the OWASP SAMM methodology, although that is largely irrelevant for the rest of my story. Each assessment followed a structured format. We typically scheduled five remote sessions, each lasting about 90 minutes. For every session, we invited the key stakeholders for that topic from the team we were assessing. On our side, we always worked with two assessors: one led the discussion while the other took detailed notes.

Here is a breakdown of each session's topics and the stakeholder roles we typically invited.

| Session Focus | Typical Stakeholders |
|---|---|
| Strategy, Metrics, Compliance, and Education | Governance leads, security champions |
| Application Risk, Threat Modeling, Architecture | Application architects, senior developers, engineering managers |
| Security Requirements, Security Testing | Business analysts, application architects, senior developers, PMs |
| Secure Build, Secure Deployment, Defect Management | DevOps engineers, senior developers |
| Incident Management, Environment Management, Operational Management | DevOps and infrastructure engineers, incident response teams |

After the sessions, each assessor scored the team or organization independently. We then consolidated our findings and produced a final consensus-based assessment report.

In the next sections, I will first present the quantitative results from these evaluations. I will also share the qualitative insights that highlight the most critical application security failures we observed across organizations.

Quantitative Findings

The quantitative results from our assessments are available through the OWASP SAMM Benchmark Project. While the dataset doesn’t include every organization we assessed (some chose not to share their results), I believe the published data still provides a reasonably representative sample.

However, these numbers on their own don’t say much. They’re not actionable. You can’t draw meaningful lessons from them as an organization.

That said, there’s one thing worth noting. The average SAMM scores appear surprisingly high. Based on what we’ve seen in the field, I would expect a global average below 1.0. One possible explanation is that organizations tend to share their results only after reaching certain maturity milestones in their application security programs, which skews the benchmark upwards.

Read the complete analysis of the OWASP SAMM benchmark here.

Qualitative Findings

The root of most application security failures is simple: security is often treated as a mix of compliance and quick fixes. The quick fixes are usually driven by tools. Teams with advanced tooling tend to over-rely on it. As long as the high and critical vulnerabilities are addressed, they assume they’re in good shape. For everything else, teams often create some compliance documents (nowadays often generated by AI) just to keep the assessors happy.

But in reality, an effective Application Security program is built on people and processes. Tools come last and they assist people and processes, not replace them.

Let’s take a closer look at the key issues we encountered during our assessments.

Lack of a crystal clear understanding of application risk

There’s no such thing as “100% secure”, as security is not absolute. Application security is about understanding risk and applying the right controls (which are people, processes or both). Yet in our assessments, we have rarely encountered teams with a clear view of their application risk. Some organizations maintain CMDB records, which is a great starting point. However, teams would often say “everything we do is equally important”. That is as likely as getting struck by lightning on a cloudless day.

A pedantic expert will tell you that “without a risk-based view of your application portfolio, you can’t prioritize”. I would argue that even a clear listing of priorities driven by the CMDB records is not enough. An application risk profile should create a shared understanding of what’s tolerable and what’s unacceptable. More importantly, your entire team should be aware of that. Developers and QA engineers need to know where the red lines are. It’s like sending your football team onto the field without telling them which goal is theirs.

Sample application risk assessment methodology

Here is a very basic application risk profile assessment questionnaire and the outcome of that assessment. My recommendation is to start with something as simple as this, but gradually move towards something more systematic based on e.g., FAIR or NIST SP 800-30.

Risk assessment questionnaire

- Are there any specific compliance requirements for the application?
  0 = none, 5 = GDPR only, 10 = more than GDPR

- How sensitive is the data handled by the application?
  0 = public data, 10 = highly sensitive

- What is the potential reputational impact to the customers in case of a breach?
  0 = no impact, 10 = we are losing the customer

- What is the potential reputational impact to our organization?
  0 = no impact, 10 = substantial impact on the bottom line

- What is the level of security knowledge within the development team working on the application?
  0 = no security awareness, 10 = expert-level

- Can external users register and use the application?
  0 = no, 10 = yes

- What are the availability requirements?
  0 = minimal, 10 = mission-critical

- Is the product owned by the organization?
  0 = no, 10 = yes

The total score is the sum of the eight answers (maximum 80). The risk profile is Low (35 or below), Medium (36-60) or High (61-80).

| Product/Solution | Compliance | Data sensitivity | Customer reputation | Org reputation | Team security knowledge | External users | Availability | Own product | Total | Risk level |
|---|---|---|---|---|---|---|---|---|---|---|
| Quantix | 5 | 3 | 5 | 3 | 6 | 0 | 2 | 0 | 24 | Low |
| Strivix | 10 | 10 | 10 | 10 | 10 | 0 | 2 | 10 | 62 | High |
| Clyro | 5 | 8 | 5 | 6 | 8 | 8 | 5 | 10 | 55 | Medium |
| Nexora | 5 | 7 | 10 | 10 | 10 | 10 | 2 | 10 | 64 | High |
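The scoring is simple enough to automate, which helps keep the portfolio view current. Below is a minimal Python sketch, not a definitive implementation: the question keys, the `assess` and `risk_level` function names, and the input validation are my own illustrative choices. It treats a total of exactly 35 as Low, which is an assumption on my part.

```python
# Hypothetical keys, one per question in the questionnaire, in the same order.
QUESTIONS = (
    "compliance",
    "data_sensitivity",
    "customer_reputation",
    "org_reputation",
    "team_security_knowledge",
    "external_users",
    "availability",
    "own_product",
)


def risk_level(total: int) -> str:
    """Map a total score (0-80) to a risk band."""
    if total <= 35:  # assumption: a total of exactly 35 counts as Low
        return "Low"
    if total <= 60:
        return "Medium"
    return "High"


def assess(answers: dict[str, int]) -> tuple[int, str]:
    """Sum the eight answers and return (total, risk level)."""
    missing = set(QUESTIONS) - set(answers)
    if missing:
        raise ValueError(f"missing answers: {sorted(missing)}")
    for question in QUESTIONS:
        if not 0 <= answers[question] <= 10:
            raise ValueError(f"{question} must be between 0 and 10")
    total = sum(answers[question] for question in QUESTIONS)
    return total, risk_level(total)


# The four sample products from the table, scores in question order.
PORTFOLIO = {
    "Quantix": [5, 3, 5, 3, 6, 0, 2, 0],
    "Strivix": [10, 10, 10, 10, 10, 0, 2, 10],
    "Clyro": [5, 8, 5, 6, 8, 8, 5, 10],
    "Nexora": [5, 7, 10, 10, 10, 10, 2, 10],
}

if __name__ == "__main__":
    for name, scores in PORTFOLIO.items():
        total, level = assess(dict(zip(QUESTIONS, scores)))
        print(f"{name}: {total} ({level})")
```

Keeping the answers next to the computed level makes it easy to revisit a single answer when the application changes, rather than re-running the whole exercise.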

Treating threat modeling as a bureaucracy

Application risk assessment is closely related to threat modeling. In fact, my recommendation is to combine the two or at least use the risk assessment outcomes as an input for the threat modeling sessions. At this point, you might be thinking, “gosh, that sounds so complicated”. If your risk assessments or threat modeling sessions are taking longer than a couple of hours, you are probably not doing them right (with some exceptions, of course). Also, you are never truly done with either of these activities.

Let us look at the most common anti-patterns we have encountered during our assessments. Interestingly, some of these are already highlighted in the Threat Modeling Manifesto.

The hero threat modeler

Threat modeling should be a team exercise. That creates the awareness that is essential for the success of your application security program. It also brings a variety of viewpoints. Asking one person to do the threat modeling reduces it to an architectural assessment. There is nothing wrong with doing architectural assessments, but you can’t call that threat modeling.

Outsourcing threat modeling

Some teams try to bring in external experts to handle threat modeling on their behalf. The logic is simple: it works for penetration testing, so why not reuse the same model?

There are a few reasons this approach doesn’t translate well. Firstly, external teams rarely have the deep context needed to understand how your application actually works. Getting them up to speed is time-consuming and expensive. Secondly, outsourcing threat modeling defeats one of its primary goals, i.e., building awareness within your own team. If your developers, architects, and product owners aren’t involved, they won’t develop the mindset needed to identify and prevent threats in the future.

Threat modeling is not a deliverable. It’s a way to level-up your team’s security awareness above all.

Tool-driven and AI-driven threat modeling

Finally, many teams fall into analysis paralysis the moment they get into actual threat modeling. The hope is that a shiny tool or some AI-powered automation will make the process painless. That’s like teaching someone to drive using a Formula 1 car – overkill and likely a crash.

In my experience, threat modeling tools are often overrated. Even basic diagramming tools can quickly become a distraction. Teams spend more time figuring out how to draw the DFD than thinking about what could actually go wrong. I’m not against tooling or AI. They can add value, but only after the team has done a few real sessions using pen and paper. The goal should be shared understanding and critical thinking, not polished diagrams or autogenerated threat lists.

Sample threat modeling methodology

Here’s a lightweight, repeatable approach you can apply to any application. I’m simplifying a few steps, but it doesn’t need to be much more complicated than this.

- Assemble your team. Bring your team together for a brainstorming session of up to 2 hours. At a minimum, include one person from each of the following roles: senior developer, QA engineer, architect, business analyst.

- Share the application’s risk profile. Start the session by reviewing the risk profile of the application. Be explicit about the types of events or threats that are unacceptable vs those that are tolerable. This sets the tone and priorities.

- Build a simple Data Flow Diagram (DFD). Draw a DFD that clearly shows how the application works. Skip the overly detailed technical layers; you can add detail once the team starts hunting for threats.

- What could go wrong? With the diagram in front of you, ask the team “what could go wrong?”. Focus on the big picture: risks, attack paths, trust boundaries. Don’t fall into the weeds of specific vulnerabilities. Note that during this process you could expand your DFD or even focus on a specific portion of the system. The goal is to find threats.

- Track the findings. Add each identified threat or concern to your team’s issue tracker or backlog. Assign follow-up owners where needed.

- Make it a habit. Repeat this process ideally during the sprint planning. If that is not an option, every 6-12 months should do.

For more insights on threat modeling please take a look at Toreon’s world class threat modeling approach.

Ignoring security requirements

Throughout our assessments, most teams would say that they do consider security requirements during the analysis phase. For instance, when a specific functionality is discussed, the customer might throw in an additional security constraint, or perhaps the feature has tangential authentication and authorization requirements. The truth is that security requirements are often entirely absent from the broader set of specifications that guide design, implementation and testing. Teams focus on features. Business priorities dominate. Security requirements are never treated as first-class citizens, and that is a huge missed opportunity.

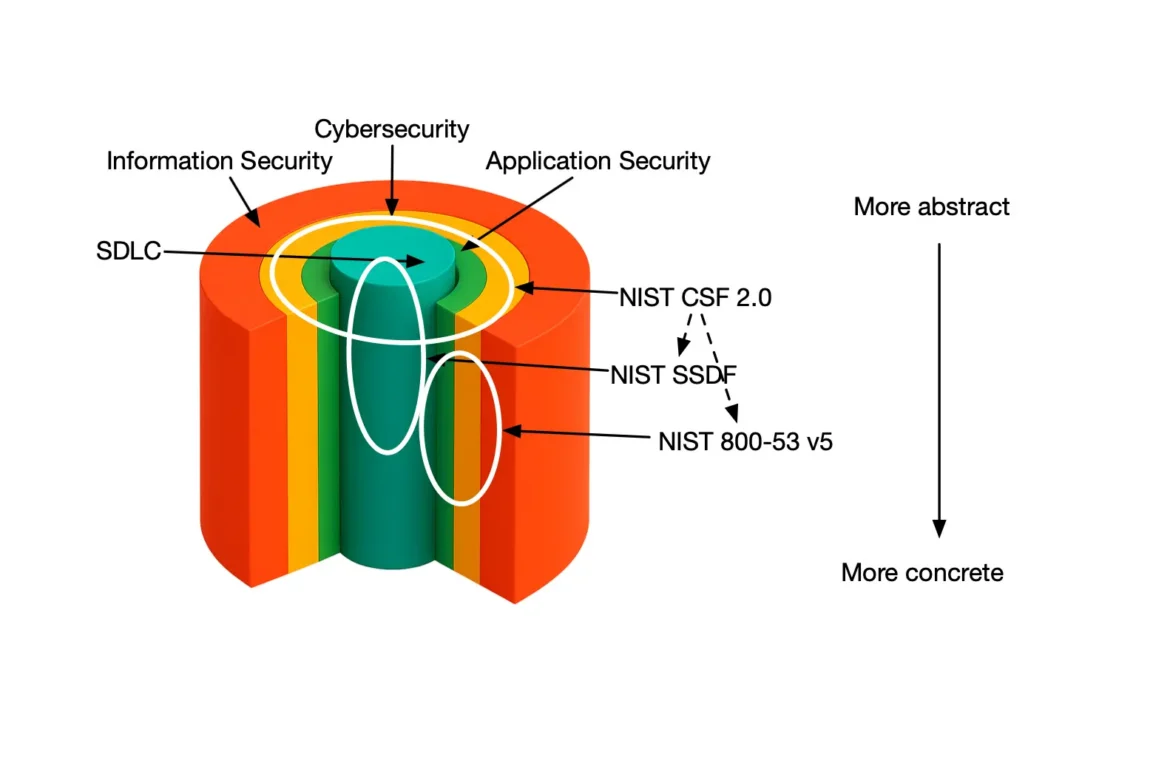

Here is a nearly mathematical proof of why security requirements are the key to a successful Application Security program:

- Your SDLC revolves around requirements. They drive design, development, and testing.

- Requirements are the common language across all stakeholders. Analysts and business teams define and prioritize them. Architects and developers implement them. QA verifies them.

- Adding security as requirements has nearly zero overhead, but delivers maximum impact.

- Every implemented security requirement makes your system more secure by definition.

If only there were an easy way to start with some template requirements...

Simplify security requirements with OWASP ASVS

OWASP ASVS is an excellent starting point for your Application Security program. ASVS might feel large and overwhelming, but you don’t need to tackle it all at once. Here is a simple way to get started:

- Pick a small, relevant subset of ASVS requirements based on your application’s technology and risk.

- Inject those requirements directly into your sprint planning.

That’s it! Your teams already know how to implement and test requirements. Chances are, some of these requirements are already in place. Perfect, make sure to write test cases for those and automate them. Expand the list as your team’s bandwidth allows.
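To make "write test cases and automate them" concrete, here is a minimal Python sketch of a single security requirement turned into an automated check. It is loosely modeled on the password length requirements in ASVS; the 12-character minimum, the `is_acceptable_password` function and the test names are illustrative assumptions, so check the exact wording and thresholds in the ASVS version you adopt.

```python
# Assumption: the requirement selected for this sprint is a minimum password
# length of 12 characters. Adjust to the ASVS version and level you follow.
MIN_PASSWORD_LENGTH = 12


def is_acceptable_password(password: str) -> bool:
    """Hypothetical validation hook called when a user sets a password."""
    return len(password) >= MIN_PASSWORD_LENGTH


def test_rejects_password_below_minimum_length() -> None:
    assert not is_acceptable_password("short-pass")  # 10 characters


def test_accepts_password_at_minimum_length() -> None:
    assert is_acceptable_password("a" * MIN_PASSWORD_LENGTH)


def test_accepts_long_passphrase_with_spaces() -> None:
    assert is_acceptable_password("correct horse battery staple")


if __name__ == "__main__":
    # Run the checks directly; a test runner such as pytest would also
    # discover the test_* functions.
    test_rejects_password_below_minimum_length()
    test_accepts_password_at_minimum_length()
    test_accepts_long_passphrase_with_spaces()
    print("all password requirement checks passed")
```

The point is less the check itself than the traceability: each test maps to one requirement in the backlog, so QA can verify it like any other requirement.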

Make sure to check out this OWASP Global AppSec recording where I dive deeper into this approach.

Over-reliance on security tooling

Bad news for some, no news for others: security tools are not going to solve your problems. Based on my experience, they often introduce new ones. Here are some of the “toolmares” we have seen.

Note that this section focuses mainly on tools that scan for vulnerabilities by analyzing your source code, dependencies, deployed applications, and infrastructure.

An overwhelming number of findings

A false sense of security

Security dashboards don’t reflect reality

Tools to optimize processes and support people

Focus on vanity metrics

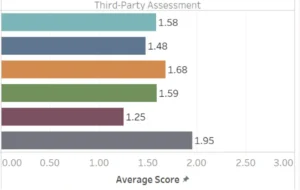

Letting tools define your metrics

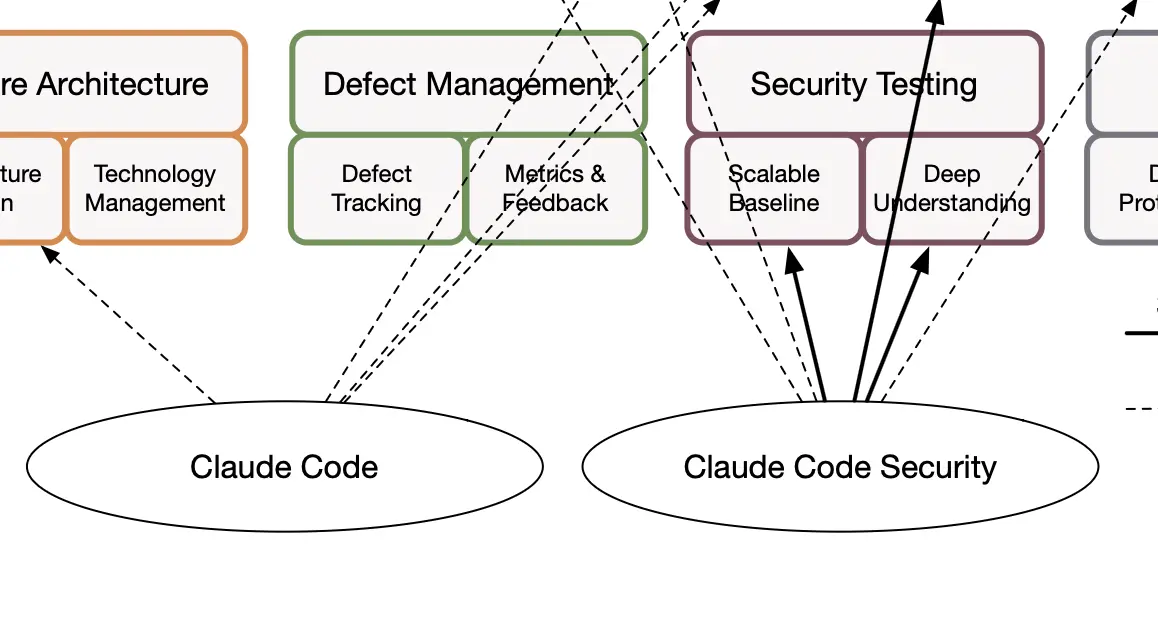

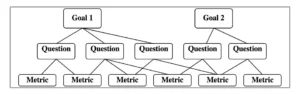

Most teams we have seen rely on metrics provided by their security tooling that look great on paper. The teams, and even the whole organization, then focus on improving these numbers to meet certain targets. Unfortunately, that is completely backwards. Meet the Goal-Question-Metric (GQM) framework.

The idea behind GQM is relatively simple. Teams start with specific goals, each defined for an object, for a specific reason, and with respect to a model of quality. For instance: we want to reduce the risk of getting breached due to a known vulnerability in production. Next, teams define questions that measure progress towards those goals. Only then should teams pick metrics that answer those questions quantitatively.
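As a rough illustration of the goal, question, metric order, here is a small Python sketch. The goal statement is the one from the text; the question, the metric, the `Goal`/`Question`/`Metric` classes and the remediation dates are hypothetical and only show how a metric is derived from a question rather than from whatever a tool happens to report.

```python
from dataclasses import dataclass, field
from datetime import date
from statistics import median


@dataclass
class Metric:
    name: str
    value: float
    unit: str


@dataclass
class Question:
    text: str
    metrics: list[Metric] = field(default_factory=list)


@dataclass
class Goal:
    statement: str
    questions: list[Question] = field(default_factory=list)


# Hypothetical records: (date a known vulnerability was detected in
# production, date the fix was deployed).
FINDINGS = [
    (date(2025, 1, 6), date(2025, 1, 20)),
    (date(2025, 2, 3), date(2025, 2, 10)),
    (date(2025, 3, 3), date(2025, 4, 2)),
]


def median_days_to_fix(findings: list[tuple[date, date]]) -> float:
    """Median number of days between detection and deployed fix."""
    return median((fixed - found).days for found, fixed in findings)


goal = Goal(
    statement=(
        "Reduce the risk of getting breached due to a known "
        "vulnerability in production"
    ),
    questions=[
        Question(
            text="How long do known vulnerabilities stay in production?",
            metrics=[
                Metric("median time to fix", median_days_to_fix(FINDINGS), "days")
            ],
        )
    ],
)

if __name__ == "__main__":
    print(goal.statement)
    for question in goal.questions:
        print(f"  Q: {question.text}")
        for metric in question.metrics:
            print(f"     M: {metric.name} = {metric.value} {metric.unit}")
```

If a metric cannot be attached to a question, and the question to a goal, that is a hint it may be a vanity metric.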

Using CVEs and CVSS scores as a proxy of risk

The most commonly used security metrics are built on CVEs with CVSS scores, both of which come with a lot of issues.

CVEs are not vulnerabilities until proven exploitable, at least according to MITRE's own definition of a weakness vs. a vulnerability. How often does a CVE end up being exploitable? I am not aware of any scientific work on this. However, a recent survey on a subset of CVEs has demonstrated that 34% of the CVEs were either never confirmed or were even disputed by their maintainers.

A very illustrative example is the critical CVE-2020-28435, which reports a command injection vulnerability in a JavaScript library that wraps the ffmpeg command-line tool. The library exposes a function called execute, which simply passes arguments to the shell by design! Sanitizing input would break the functionality. Yet it still received a high CVSS score and was flagged as critical, despite posing no unexpected risk in its intended use.

CVSS scores have at least two issues. First, several academic studies have shown that CVSS scoring is highly subjective: different experts often assign different scores to the exact same vulnerability, leading to inconsistent assessments. More importantly, the final CVSS score is a heuristic, not a measurement. It’s derived from a vector of ordinal categories, such as attack complexity or impact on confidentiality, and the CVSS formula translates these attributes into a numeric score to make comparison easier. The resulting number looks precise, but it inherits the coarseness of the labels it was built from.

Hence, a dashboard that is built on top of CVEs using CVSS scores is fundamentally flawed at least from a scientific perspective.
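To show how a handful of ordinal labels turn into a precise-looking number, here is a Python sketch of the CVSS v3.1 base score calculation. The weights and formula follow my reading of the CVSS v3.1 specification (base metrics only, no temporal or environmental metrics); treat it as an illustration and verify any result against the official calculator. The `base_score` and `roundup` function names are my own.

```python
import math

# Fixed numeric weights assigned to each ordinal label (CVSS v3.1 base metrics).
WEIGHTS = {
    "AV": {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2},
    "AC": {"L": 0.77, "H": 0.44},
    "UI": {"N": 0.85, "R": 0.62},
    "C": {"H": 0.56, "L": 0.22, "N": 0.0},
    "I": {"H": 0.56, "L": 0.22, "N": 0.0},
    "A": {"H": 0.56, "L": 0.22, "N": 0.0},
}
# Privileges Required uses different weights when the scope is changed.
PR_WEIGHTS = {
    "U": {"N": 0.85, "L": 0.62, "H": 0.27},
    "C": {"N": 0.85, "L": 0.68, "H": 0.5},
}


def roundup(value: float) -> float:
    """Round up to one decimal, using integer arithmetic to avoid float noise."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the base score for a vector such as 'AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H'."""
    m = dict(part.split(":") for part in vector.split("/"))
    scope = m["S"]

    iss = 1 - (
        (1 - WEIGHTS["C"][m["C"]])
        * (1 - WEIGHTS["I"][m["I"]])
        * (1 - WEIGHTS["A"][m["A"]])
    )
    if scope == "U":
        impact = 6.42 * iss
    else:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15

    exploitability = (
        8.22
        * WEIGHTS["AV"][m["AV"]]
        * WEIGHTS["AC"][m["AC"]]
        * PR_WEIGHTS[scope][m["PR"]]
        * WEIGHTS["UI"][m["UI"]]
    )

    if impact <= 0:
        return 0.0
    if scope == "U":
        return roundup(min(impact + exploitability, 10))
    return roundup(min(1.08 * (impact + exploitability), 10))


if __name__ == "__main__":
    print(base_score("AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))
    # Same vector, but one assessor judges attack complexity as High.
    print(base_score("AV:N/AC:H/PR:N/UI:N/S:U/C:H/I:H/A:H"))
```

A single disagreement between two assessors on one label, here attack complexity, is enough to move the same finding from 9.8 (critical) to 8.1 (high), which is exactly the kind of shift that reorders a dashboard.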

Siloed security teams

Application security starts with people. How you work with them determines whether your AppSec program actually works.

We consistently saw another application security failure across organizations, namely that security is still viewed as the responsibility of the security team. Everyone else builds and ships, and the security team is expected to “secure it” afterwards. Even if it works, it doesn’t scale. Security teams are the enablers who help your team build secure habits, strengthen their processes and introduce the right tooling to help them. They are there to teach you how to fish, not bring you the fish.

Take penetration testing as an example. Pen testing is something that your own team can rarely do (I disagree with this statement, but let’s leave that discussion aside for now). Hence, external experts are brought in to find the vulnerabilities and write a nice report. Most teams we have assessed had a plan in place to mitigate the findings, but not to learn from them. That’s a missed opportunity. Every security finding should be a trigger for improvement, not only in the code, but in how the team works. A team serious about security will revisit training to fill in knowledge gaps. They’ll search for similar patterns in other parts of the codebase or other systems. They’ll update their tests to catch those classes of issues earlier. And they’ll refine their security requirements to prevent similar vulnerabilities from reappearing.

Security champions to the rescue

The most effective way to break down silos and embed security into daily engineering work is by building a security champions program. This is how you make security go viral within the organization. While the program should be driven and supported by the security team, its success depends on empowering individual development teams. Every dev team should have at least one dedicated security champion who bridges the gap between security and engineering, brings awareness to the rest of the team, and ensures that security becomes part of the conversation at every stage of development. Champions scale security knowledge. And over time, they transform security from a bottleneck into a habit.

Conclusions

Over the past three years, we’ve conducted more than 40 systematic OWASP SAMM assessments across a wide range of organizations. In this blog, we’ve shared the most common application security failures we’ve observed in the field. The good news is that most organizations are clearly investing in AppSec and giving it the attention it deserves. The bad news is that some of those efforts are misdirected, leading to frustration and inefficiencies.

At the heart of these problems lies a deeply rooted misconception of confusing compliance with security. Compliance focuses on passing audits. Security focuses on reducing risk. Tooling is essential, but it also ends up contributing to the problem. Too many teams let tools define their processes, instead of building strong processes first and choosing tools that support them. The tool-first mindset leads to noisy dashboards, false confidence, and a focus on vanity metrics rather than real outcomes.

Official resources

- OWASP SAMM

- OWASP DSOMM

- OWASP ASVS

- FAIR

- NIST SP 800-30

- BSIMM

- CVE-2020-28435

- Threat Modeling Manifesto