Updated: 1 December, 2025

29 July, 2025

About 4 years ago, I joined the OWASP Software Assurance Maturity Model (SAMM) project as a core team member. Ever since, I have regularly come across the OWASP DevSecOps Maturity Model (DSOMM). Many people seem to have the impression that SAMM is at odds with DSOMM. But is that so? What are the key differences between the two frameworks? Who are the key stakeholders in the context of these frameworks? Which framework should you pick as an organization? In this blog, I will dive deep into the key differences between the two frameworks.

I have been coming across so much AI-generated noise lately that I am officially allergic to it. It is also very clear that AI can never figure out these insights on its own without some serious prompting. So I have decided to keep AI out of this. AI has not touched a single line in this blog. Enjoy my human mistakes!

This blog expresses my personal experience and opinions, though I have received some qualitative feedback from the DSOMM team and updated the blog accordingly.

Frameworks to manage application security

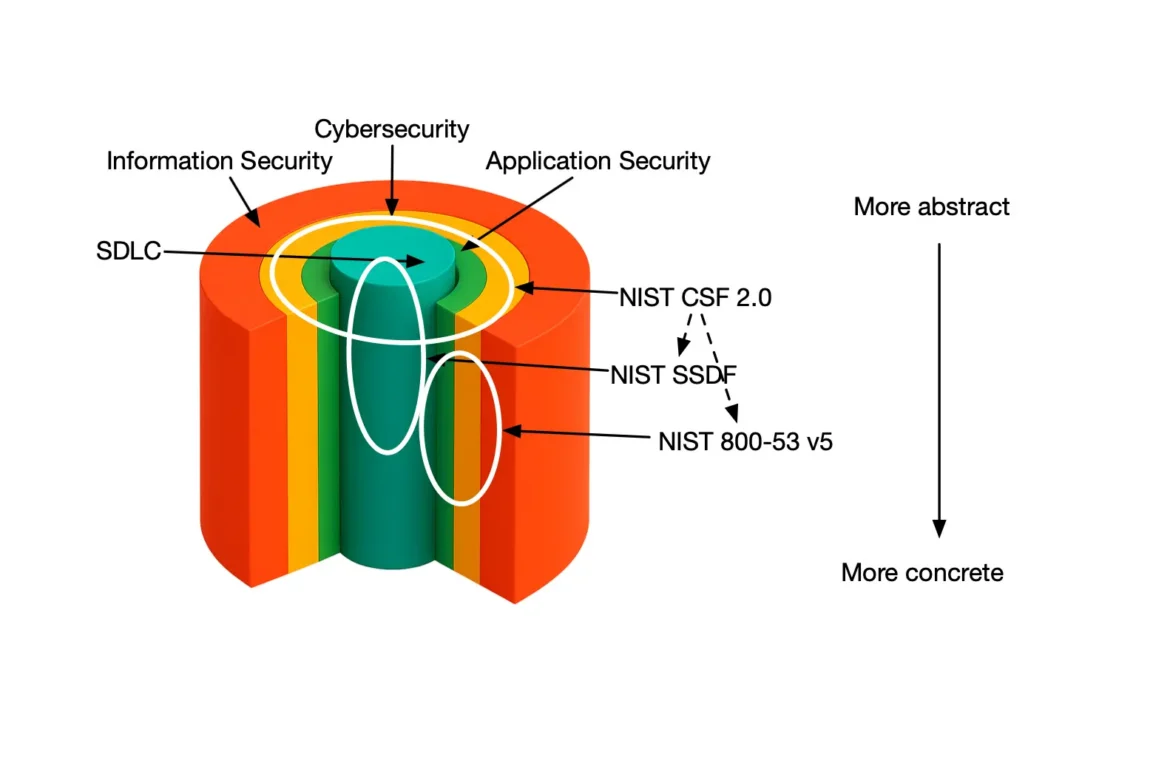

Honestly, it took me quite some time to figure out what a framework is. It is simple, really. A framework is a structured set of best practices. In today’s world, if organizations want to deliver secure software systems, there is not much choice aside from using a framework.

Maturity models are a particular type of framework. They acknowledge the fact that organizations are very slow to change. A maturity model allows for a smoother and more incremental transition from zero to hero. In application security, being a “hero” informally means having reached an acceptable level of risk.

OWASP SAMM

OWASP SAMM is a maturity model that focuses on security best practices in the software development lifecycle. I could probably write a book on SAMM, but here are the key facts about SAMM that are relevant to this blog:

- SAMM is prescriptive and tells you what to do. It doesn’t really say how to implement each activity, though. This is by design, to make sure that SAMM is applicable to any development project, team or organization, regardless of size, tech stack, development process, etc.

- There are 5 top-level categories in SAMM, called business functions (Governance, Design, Implementation, Verification and Operations), that represent well-known topics in the software development process. These topics resonate well with stakeholders involved in the software development lifecycle (SDLC).

- Each business function contains 3 security practices, and each practice is split into 2 streams. At this lowest level of abstraction, there are 30 streams. Each stream represents a particular aspect of security within the SDLC. Maturity levels are, in fact, defined at the stream level.

- SAMM orders its maturity levels by difficulty. Level 1 (ad-hoc) is typically easy to achieve and often creates a lot of awareness in the team about a particular topic. Level 2 requires a systematic and more rigorous approach. Finally, level 3 is about mastery and represents a well-integrated approach with feedback loops to other SAMM activities in the SDLC.
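To make this structure more concrete, here is a minimal Python sketch of how stream scores could roll up into the single overall number that SAMM assessments report. It assumes each stream has already been scored between 0 and 3 and that every level of the hierarchy is weighted equally. The practice names are real SAMM practices, but the scores are invented, only a subset of practices is shown, and the official SAMM toolbox may aggregate answers differently.

```python
from statistics import mean

# Hypothetical assessment: business function -> practice -> its 2 stream scores.
# Each stream score lies between 0 and 3. Only a subset of practices is shown;
# a full SAMM assessment covers 5 functions x 3 practices x 2 streams = 30 streams.
assessment = {
    "Governance": {
        "Strategy and Metrics": [1.0, 0.5],
        "Policy and Compliance": [1.5, 1.0],
    },
    "Verification": {
        "Security Testing": [2.0, 1.5],
    },
}


def practice_score(stream_scores):
    """Average the streams of one practice (assumption: equal weights)."""
    return mean(stream_scores)


def function_score(practices):
    """Average the practice scores of one business function."""
    return mean(practice_score(scores) for scores in practices.values())


def overall_score(assessment):
    """Average the business function scores into a single number."""
    return mean(function_score(practices) for practices in assessment.values())


print(overall_score(assessment))  # 1.375
```

With these made-up numbers, Governance averages 1.0 and Verification 1.75, so the overall score comes out at 1.375. The symmetry of the model is what makes this kind of averaging straightforward.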

If you want to know more about SAMM in detail, please check out this blog, which also contains a full self-paced SAMM Fundamentals Course, completely free of charge.

OWASP DSOMM

OWASP DSOMM is a maturity model that focuses on security best practices in DevOps processes. Here are the key facts about DSOMM relevant for the comparison with SAMM:

- DSOMM focuses on security in the context of DevOps. I won’t try to define DevOps in this blog, as that would take us too far. In practice, the core of the DevOps philosophy is to maximize automation, and DSOMM focuses on security in the context of that SDLC automation. For less technical readers, think about an artisan workshop where a master chocolatier makes chocolate. The chocolatier has been working relentlessly for decades and has mastered the whole process. While all of that sounds nice for your chocolate experience, it rarely works well in an engineering discipline. DevOps is about turning that artisan workshop into a fully automated factory. DSOMM is about making sure nobody can mess with the factory setup and ruin the chocolate.

- DSOMM also has 5 top-level categories called dimensions. Each category has sub-categories that are then refined into maturity levels with activities.

- DSOMM has 5 maturity levels that target a smooth transition to a secure DevOps SDLC.

If you want to know more about DSOMM please check out this blog that my colleague Nicolas has recently written.

DSOMM vs SAMM: Comparative analysis

Honestly, the difference between DSOMM and SAMM isn’t very clear even within the OWASP community. DSOMM positions itself as a framework for technical teams, while SAMM is seen as a framework for security experts. But is that so? The remainder of this section provides an in-depth comparison.

Structure and overlap

SAMM and DSOMM share quite a few similarities and overlap considerably. In fact, there has been quite some cross-pollination between the two projects. DSOMM actually references SAMM for the Education and Guidance sub-dimension. DSOMM also leverages OWASP’s ASVS as actual control implementations for Application Hardening. Using SAMM’s business functions as a lens, there is substantial overlap in the Implementation and Verification business functions and somewhat less in Design and Operations. Governance practices like strategy, corporate metrics and compliance management are largely SAMM-only concepts. For an in-depth mapping please visit.

SAMM has a symmetrical structure, with every stream having exactly 3 maturity levels, while DSOMM does not follow this idea. Some DSOMM sub-categories even have missing maturity levels. SAMM’s symmetry makes it mathematically and practically nice to work with. The downside of this approach is that not all activities in SAMM carry equal weight. For instance, Data Protection is much easier to implement than, e.g., Threat Modeling. DSOMM does not have to deal with this, and its activities seem to be more balanced. Leaving some maturity levels empty is also a practical way to make sure that no activities come across as artificially created.

Stakeholders and bootstrapping

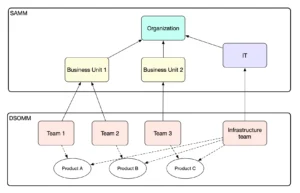

The list of stakeholders involved in SAMM is quite broad. Siemens, in collaboration with Maxim Baele from the SAMM core team, has recently created the SAMM Skills Framework, which zooms in on how various roles are involved in a possible SAMM implementation. The list of roles is extensive and involves practically everyone in the organization. That is not surprising, though: security is everyone’s job.

I am not aware of any similar analysis created for DSOMM. While DSOMM is more focused on DevOps, I would still expect a DSOMM implementation to involve a substantial set of roles, similar to SAMM. Hence, the SAMM Skills Framework could easily be adapted into something similar for DSOMM.

I see two key differences between SAMM and DSOMM when it comes to the stakeholders’ involvement.

Firstly, while DSOMM involves a large number of stakeholders, most of the work will be done within the DevOps teams. Hence, a DSOMM implementation will not require as much collaboration across stakeholders, at least at the lower maturity levels. SAMM is broader and has a much larger governance component. Thus, stakeholder collaboration is required much earlier in the adoption process.

Secondly, and related to the first point, DSOMM is much easier to start using as a technical guide. DevOps teams are less likely to need formal approval from upper management to improve the security of their build and deploy pipelines. This means that ambitious DevOps teams can bootstrap DSOMM in the trenches and secure management buy-in afterwards. That is much less likely to work with SAMM.

Summary: DSOMM lives closer to the DevOps teams. Hence teams can more easily bootstrap DSOMM and get the management buy-in later by demonstrating early successes. SAMM is unlikely to be successful unless implemented in a more rigid top-down fashion.

The “not applicable” issue and scoring

One of the most common questions around SAMM is whether everything is really applicable to everyone. SAMM considers everything applicable. It might not be applicable today, but things change. Take, for instance, Supplier Security, which is about vetting your outsourced development teams. For companies that do not work with outsourced teams, this stream seems not applicable. However, your company might change its stance and start working with outsourced development. SAMM recommends focusing on improving the security posture of your organization rather than chasing scores. A more recent recommendation is to use the concept of target postures, something that is supported in the SAMMY and Open SAMMY tools. You then try to maximize your percentage-to-target score rather than your absolute score.
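A target posture turns scoring into a relative measure. The Python sketch below is one plausible way to compute a percentage-to-target score, not necessarily how SAMMY or Open SAMMY compute it. It assumes per-stream current and target scores between 0 and 3 and caps each stream at its target, so over-achieving in one stream cannot mask a gap elsewhere. The stream names are real SAMM streams; the numbers are hypothetical.

```python
# Hypothetical current and target scores per SAMM stream (scale 0 to 3).
current = {"Threat Modeling": 1.0, "Supplier Security": 0.0, "Application Risk Profile": 2.0}
target = {"Threat Modeling": 2.0, "Supplier Security": 1.0, "Application Risk Profile": 2.0}


def percentage_to_target(current, target):
    """Return the share of the target posture reached, in percent.

    Each stream contributes at most its target score (assumption), and a
    stream missing from `current` counts as 0.
    """
    reached = sum(min(current.get(stream, 0.0), goal) for stream, goal in target.items())
    total = sum(target.values())
    return 100 * reached / total if total else 100.0


print(percentage_to_target(current, target))  # 60.0
```

Note how Supplier Security is not dropped as “not applicable”: a team without outsourced development can simply set a modest target for it today and raise it when things change.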

Initially, I thought that DSOMM took a philosophically different view. However, after talking to Timo from the DSOMM team, it seems that DSOMM is not much different from SAMM when it comes to the “not applicable” issue. There are certain activities in DSOMM that might not be as relevant, just like in SAMM. Nonetheless, claiming they are not applicable is not in line with the model’s philosophy. An activity might not be relevant today, but it might become relevant tomorrow. Hence, the idea of a target posture that was embraced by the SAMM team would work here as well.

Summary: SAMM offers a clear scoring strategy that allows measuring the current security posture and planning and demonstrating improvements. DSOMM has no scoring, but it could be extended to offer something similar (both the SAMMY and Open SAMMY tools have implemented this idea). Any activity might not be applicable today but become relevant tomorrow. So “not applicable” is not really a thing in either SAMM or DSOMM.

Benchmark

Something closely related to the “not applicable” issue is the SAMM Benchmark. One of the greatest advantages of SAMM is the benchmark, which allows companies to compare themselves to other firms of similar size and in the same industry. The project has already released 2 versions of the benchmark, with an average SAMM score of 1.44. Unfortunately, the benchmark has at least 3 issues when it comes to the science of metrics.

- The individual benchmark submissions might have a reliability issue. I have been involved in over 50 paired SAMM assessments with 2 other SAMM core team members. We rarely end up with exactly the same score, though our scores are usually close.

- A SAMM assessment nearly always requires some additional interpretation. This means that a direct comparison of two teams having the same score might have validity issues.

- More importantly, though, there are construct validity issues with SAMM. You can attain a score of 2.5, which is very high, and at the same time have less security (i.e., more risk) than another team with a score of only 1.88.
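The construct validity issue can be illustrated with a few lines of Python. The stream names are real SAMM streams, but the scores for the two teams are hypothetical, chosen so that the averages match the 2.5 and 1.88 mentioned above. The point is only that an average can hide a weak stream; this is not a proposal for how risk should be measured.

```python
from statistics import mean

# Hypothetical stream scores (0 to 3) for two teams.
team_a = {"Data Protection": 3.0, "Scalable Baseline": 3.0, "Defect Tracking": 3.0, "Threat Modeling": 1.0}
team_b = {"Data Protection": 2.0, "Scalable Baseline": 1.5, "Defect Tracking": 2.0, "Threat Modeling": 2.0}


def summarize(scores):
    """Return the average score together with the weakest stream and its score."""
    weakest = min(scores, key=scores.get)
    return mean(scores.values()), weakest, scores[weakest]


for name, scores in (("Team A", team_a), ("Team B", team_b)):
    avg, weakest, low = summarize(scores)
    print(f"{name}: average {avg:.2f}, weakest {weakest} at {low}")
# Team A: average 2.50, weakest Threat Modeling at 1.0
# Team B: average 1.88, weakest Scalable Baseline at 1.5
```

Team A looks far more mature on paper, yet it has barely started threat modeling, an activity that is much harder to implement than Data Protection.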

Despite these issues, organizations consider the SAMM benchmark as a key added value of the project.

With the proposed scoring for DSOMM in SAMMY, organizations could create internal benchmarks. However, having an external benchmark is not currently feasible.

Summary: SAMM offers an official benchmark involving over 30 data points. DSOMM currently does not have any benchmark ambitions. As an organization, you should take the global benchmark with a pinch of salt, though. An intra-organizational benchmark would make more sense, as it allows a more objective comparison across teams within the same organization.

Change cadence and versioning

SAMM is a very mature project and stable when it comes to releases. In the 15 years it has been around, it has had 2 major versions (1.x and 2.x). The current version, 2.1, has been around for at least 5 years, though the team is working on a new version.

DSOMM has been around for 9 years already. Unlike SAMM, however, DSOMM changes relatively frequently, and it is not versioned. Some of the changes break backwards compatibility. From a philosophical perspective, DSOMM does not see any issues with this, as it advocates using a custom implementation for your organization. However, if your goal is to report the DSOMM progress of 50 teams within a large organization, you might want a version freeze.

Summary: While SAMM is a very mature and stable project, DSOMM could benefit from a more rigid versioning and release cycle.

Generalization vs specialization

SAMM was designed to be very versatile and to fit any development process, organization size or technology stack. This means that the activity descriptions and quality criteria are quite generic. The downside is that SAMM has a steep learning curve and requires some additional interpretation. Based on my experience, this is one of the key disadvantages of SAMM. However, the SAMM core team offers a plethora of resources and near-real-time support in Slack to answer any questions. On the upside, SAMM is applicable to virtually any development process.

DSOMM is focused on the DevOps philosophy; hence, by definition, it is very specialized. The main advantage is that teams can easily interpret and implement DSOMM. Fun fact: as a SAMM core team member, I introduced SAMM at Codific, so I had to interpret many of its activities for our own reality. In retrospect, I should have started from DSOMM and expanded towards SAMM. The main downside is that DSOMM sometimes refers to technologies and architectures that are too specific (e.g., microservice architectures, containers). If a team is working on delivered software, for instance, many of these might not be applicable.

Summary: SAMM is generic and fits any development process, tech stack and organization size, but it is harder to interpret. DSOMM is more suitable for DevOps teams working on cloud and mobile applications.

From zero to hero in practice

Both SAMM and DSOMM are maturity models recognizing that security requires consistency and incremental improvements over time. DSOMM seems to offer a much more refined progression towards maturity. SAMM has coarser buckets that can be hard to interpret, which is great if you are mainly interested in a high-level overview. DSOMM, on the other hand, is packed with concrete advice. You could even use DSOMM as specific guidance for implementing many SAMM activities.

Looking back at the many SAMM assessments I did, it seems that you can game SAMM scoring through a shallow interpretation of the quality criteria. Hence, you might end up with an artificially high score yet lack key security processes and activities. Clearly, that is a much less likely scenario for someone who knows SAMM in depth and is focused on improving actual security. Nonetheless, in my experience, many teams, especially in a corporate-mandated setting, try to score as high as possible rather than reduce their actual security risk as much as possible.

DSOMM is much more practical and offers no official scoring mechanism. As I have mentioned in this blog, I am a strong proponent of introducing a scoring mechanism in DSOMM. For each activity, you could have a “Not implemented” and an “Implemented” scoring key with a score of 0 and 1, respectively. Gaming the system with DSOMM is much more difficult, as many activities are easy to verify. We have even started working on a proof of concept together with Smithy to automate some of these verifications.
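The proposed 0/1 scoring key is simple enough to sketch in a few lines of Python. The dimension names follow DSOMM’s dimensions as I understand them, but the activity names are hypothetical placeholders rather than official DSOMM activities, and reporting coverage per dimension as a percentage is an assumption about how such scores might be aggregated.

```python
# Hypothetical DSOMM self-assessment: dimension -> {activity: implemented?}.
# Activity names are illustrative placeholders, not official DSOMM activities.
assessment = {
    "Build and Deployment": {
        "Signed build artifacts": True,
        "Pinned base images": False,
    },
    "Test and Verification": {
        "SAST in the pipeline": True,
        "Dependency scanning": True,
        "DAST in the pipeline": True,
        "Fuzzing": False,
    },
}


def activity_score(implemented: bool) -> int:
    """Proposed scoring key: "Not implemented" -> 0, "Implemented" -> 1."""
    return 1 if implemented else 0


def dimension_coverage(activities: dict[str, bool]) -> float:
    """Percentage of a dimension's activities that are implemented."""
    if not activities:
        return 0.0
    return 100 * sum(activity_score(done) for done in activities.values()) / len(activities)


for dimension, activities in assessment.items():
    print(f"{dimension}: {dimension_coverage(activities):.0f}%")
# Build and Deployment: 50%
# Test and Verification: 75%
```

Because each activity is a yes/no fact that can often be checked in the pipeline itself, a score like this leaves far less room for interpretation than a SAMM answer.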

Summary: Introducing practical application security is a lot easier with DSOMM. SAMM needs some interpretation and guidance for each activity and has a relatively steep learning curve.

Security metrics

I have recently become very passionate about the topic of security metrics. It seems that, as an industry, we know very little about security metrics. That is surprising, given that research on defect metrics goes back more than 30 years. At the end of the day, security defects are a subset of all defects. I have even created a self-paced and completely free introductory course on the science of metrics.

Both SAMM and DSOMM address metrics, as they recognize the importance of this topic. SAMM provides generic guidance on how you should structure your overall security program around metrics. DSOMM offers quite a few metrics to measure the DevOps processes. Unfortunately, in my opinion, the metrics sections in both frameworks have room for improvement. A strong metrics-driven approach should always start from specific goals, then derive questions that support decision making, and only then pick metrics that answer these questions. The Goal-Question-Metric (GQM) framework, a proven approach in defect management, has demonstrated that a top-down approach is a must when it comes to metrics. I strongly believe both SAMM and DSOMM should critically reflect on their metrics sections and make sure they align with scientific research, rather than with industry-established metrics that are, in my opinion, questionable.
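The Goal-Question-Metric idea maps naturally onto a small tree structure. The Python sketch below is only an illustration: the goal, questions and metrics are hypothetical examples. What matters is the shape: a metric can only exist as the answer to a question, and a question only exists to serve a goal, which enforces the top-down direction.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Metric:
    name: str
    unit: str


@dataclass
class Question:
    text: str
    metrics: list[Metric] = field(default_factory=list)


@dataclass
class Goal:
    purpose: str
    questions: list[Question] = field(default_factory=list)

    def traced_metrics(self) -> list[tuple[str, str]]:
        """List every metric alongside the question it is meant to answer."""
        return [(q.text, m.name) for q in self.questions for m in q.metrics]


# Hypothetical example: all goals, questions and metrics are illustrative.
goal = Goal(
    purpose="Reduce how long known vulnerabilities stay exploitable in production",
    questions=[
        Question(
            "How quickly are critical findings remediated?",
            [Metric("Median time to remediate critical findings", "days")],
        ),
        Question(
            "Do fixed vulnerabilities come back?",
            [Metric("Reopened findings per release", "count")],
        ),
    ],
)

for question, metric in goal.traced_metrics():
    print(f"{metric}  <-  {question}")
```

Starting from whatever measurements a tool happens to produce would invert this tree, which is exactly the bottom-up pattern I consider questionable.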

Summary: Both SAMM and DSOMM could improve their metrics-related sections by incorporating more rigorous scientific methods, as opposed to tool-driven, industry-established metrics.

DSOMM vs SAMM: Side-by-side comparison

This section offers a brief side-by-side comparison of the two frameworks.

| Aspect | DSOMM | SAMM |
|---|---|---|
| Target audience | Teams looking to create a more systematic approach to manage DevOps best practices. | Application security program managers looking to set up a systematic and scalable approach to manage security in their software products. |
| Versatility | Focused on the DevOps philosophy. Includes practices that might never become relevant for a given product. | Generic and applicable to any organization size, industry and technology domain. |
| Measurability and scoring | Measurability is currently not in scope, but could easily be added. | Measurable by design. |
| Benchmark | No benchmark goals (yet). | Official benchmark updated yearly. |
| Bootstrapping | Suitable to bootstrap in a bottom-up fashion. Teams can start using DSOMM and gradually expand it into a strategic corporate initiative. | Most suitable for a top-down introduction in an organization. Without buy-in from upper management, the SAMM implementation is likely to fail. |
| Maturity | OWASP “Lab project” with a reviewed deliverable of value. | OWASP “Flagship project” with strategic value. |
| Update cadence and versioning | No clear versioning; updated relatively frequently. | Very stable, with rigid versioning. |
| Implementation complexity | Easy to understand and implement. Provides clear, actionable guidance for technical teams. | Relatively steep learning curve and open to interpretation. |

Combining SAMM and DSOMM

Comparing SAMM and DSOMM is relatively straightforward once you get to know both projects. However, that still does not answer the question I keep hearing: which of the two frameworks should we pick as an organization or a team? This section provides some actionable advice to answer that question.

First of all, it is not DSOMM vs SAMM, but DSOMM and SAMM. I tend to agree with Timo: “DSOMM is for technical teams, SAMM is for the management”, though it is an oversimplification. Nonetheless, both frameworks have their own key audience and stakeholders.

More importantly, though, you can use DSOMM as an additional scoring mechanism for SAMM activities. Obviously, you need to be working with DevOps, but so far I have yet to see a team that doesn’t. Given the overlap between the two frameworks (especially in Implementation and Verification), it is possible to use DSOMM and have your SAMM scores filled out automatically. We have partially implemented that in the SAMMY tool using the DSOMM <> SAMM mapping from OpenCRE. Official guidance from the SAMM and DSOMM teams would be extremely helpful to refine this, though.
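To illustrate how DSOMM results could pre-fill SAMM answers, here is a minimal Python sketch. The mapping table and the DSOMM activity identifiers are hypothetical placeholders; the real DSOMM <> SAMM mapping on OpenCRE, and the way SAMMY applies it, may look quite different. The sketch conservatively treats a SAMM stream level as met only when every DSOMM activity mapped to it is implemented, and only counts levels reached in order.

```python
# Hypothetical mapping: (SAMM stream, maturity level) -> DSOMM activities that
# would evidence it. Identifiers are placeholders, not real DSOMM or OpenCRE IDs.
MAPPING = {
    ("Scalable Baseline", 1): ["dsomm-sast", "dsomm-dast-basic"],
    ("Scalable Baseline", 2): ["dsomm-dast-authenticated"],
    ("Defect Tracking", 1): ["dsomm-vulnerability-tracking"],
}


def levels_met(mapping, implemented):
    """A level counts as met only if all of its mapped activities are implemented."""
    return {key: all(a in implemented for a in acts) for key, acts in mapping.items()}


def stream_level(met, stream, max_level=3):
    """Highest SAMM level reached for a stream without skipping a lower level."""
    level = 0
    while level < max_level and met.get((stream, level + 1), False):
        level += 1
    return level


implemented = {"dsomm-sast", "dsomm-dast-basic", "dsomm-vulnerability-tracking"}
met = levels_met(MAPPING, implemented)
print(stream_level(met, "Scalable Baseline"))  # 1
print(stream_level(met, "Defect Tracking"))  # 1
```

Streams without any mapped DSOMM activity (for example, most of Governance) would still fall back to a regular SAMM interview, which matches the observation that the overlap is concentrated in Implementation and Verification.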

Finally, DSOMM can simply be used as guidance for SAMM activities. It offers low-level details on how to interpret the quality criteria, which is a real pain, especially for less experienced SAMM practitioners.

Conclusion

“What is the difference between SAMM and DSOMM? Which of the two shall we pick?”. I’ve recently taken a deep dive into the DSOMM framework to search for an objective answer to that question. In this blog, I have provided an in-depth comparison of the two frameworks that answers the first question. The second question is a lot more complex, and my actionable advice is twofold.

First of all, it is DSOMM and SAMM. Despite their overlap, the frameworks serve different key stakeholders and should be combined. With tools like SAMMY and standards like OpenCRE, it is possible to unify the frameworks to reduce any accidental overhead.

Secondly, for tech teams looking to assess and improve their application security, DSOMM is a better pick. DSOMM offers an actionable and concrete set of best practices. It offers a much smoother bottom-up progression that does not require much involvement from upper management. From an organizational perspective, SAMM is a better pick. It offers a top-down approach for systematically managing the application security posture of an organization.

For organizations looking to attain high maturity for their SDLC, DSOMM and SAMM meet halfway, perfectly aligning all stakeholders and making security everyone’s job. Needless to say, a tool like SAMMY is instrumental in making this happen in practice.

Official resources

- OWASP SAMM

- OWASP DSOMM

- OWASP ASVS

- SAMM Fundamentals Course

- SAMM Skills Framework

- SAMM Metrics Course

- OpenCRE

- Goal-Question-Metric