Updated: 1 December, 2025

28 February, 2024

Application security requires a systematic approach: software security has to be dealt with throughout every stage of the software development lifecycle (SDLC). However, organizations typically struggle to create an effective improvement roadmap and end up going down the rabbit hole of fixing vulnerabilities reported by security tools. We believe that leveraging the OWASP Application Security Verification Standard (ASVS) as a security requirements framework, as well as a guide to unit and integration testing, is by far the best pick in terms of ROI. By adopting security requirements-driven testing and by turning security requirements into “just requirements”, organizations can establish a common language shared by all stakeholders involved in the SDLC.

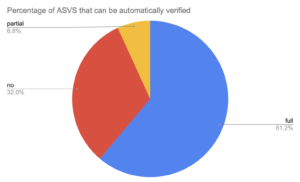

We have analyzed the complete ASVS to determine how much of it we could automate using various testing strategies. Our analysis indicates that 162 (~58%) of the ASVS requirements can be automatically verified using unit, integration and acceptance tests. We could increase verifiability by another 10% by using SAST, DAST and SCA tooling.

We also designed an empirical study in which we added 98 ASVS requirements to the sprint planning of a relatively large web application. Our team followed a security test-driven development approach: a test engineer had 8 man-days to write unit and integration tests for as many of these requirements as possible and implemented test cases for 90 of them. From this effort, we created 7 tickets for requirements with which the project did not comply.

Our study demonstrates that leveraging ASVS for requirements-driven testing can create a common thread across all stages of the software development lifecycle, making security everyone’s responsibility.

A recording of this article, as presented at the OWASP Global AppSec Conference in San Francisco, is available on YouTube.

Key takeaways:

- Start from ASVS and invest in security requirements, which serve as the lingua franca between all stakeholders.

- Require your test engineers to create unit, integration and acceptance tests for as many security requirements as possible.

- Explicit security requirements provide hands-on training and awareness for all stakeholders.

- Requirements-driven testing removes the silos between security and development teams.

- The total cost of adopting requirements-driven testing is negligible.

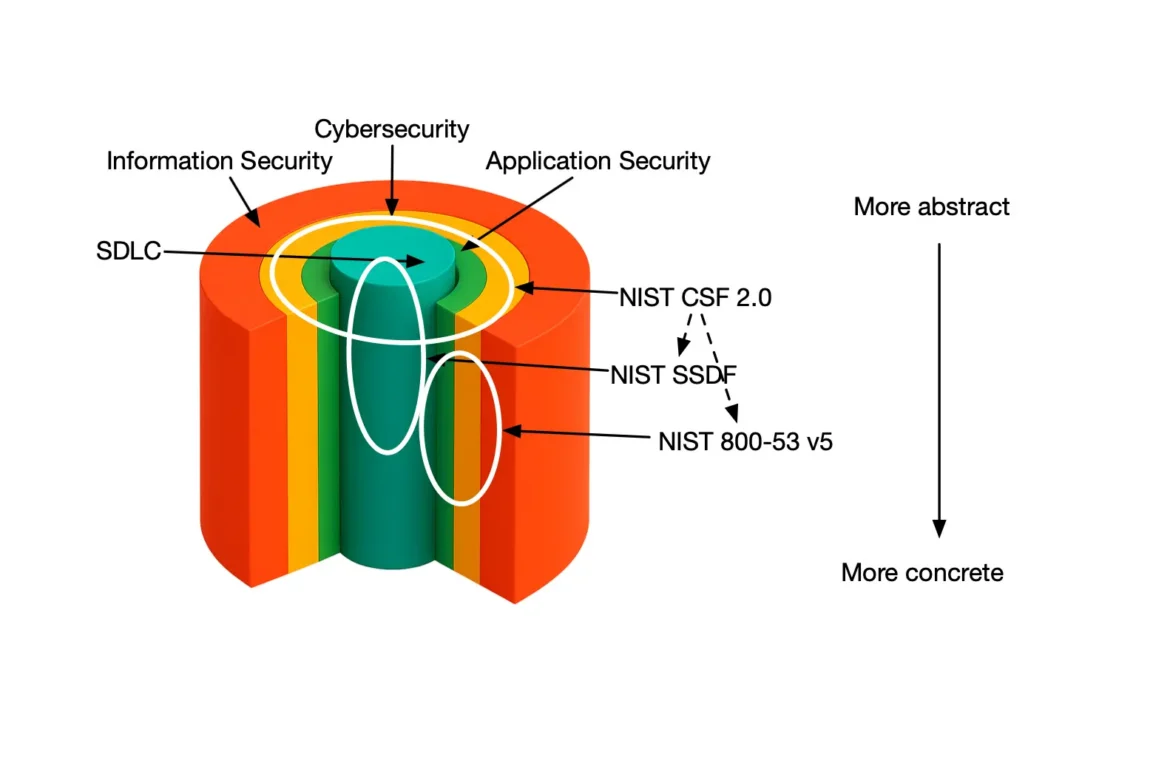

Introduction: security is broad and deep

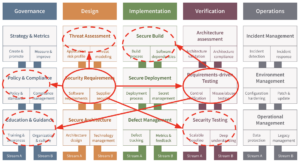

In order to solve application security challenges, organizations need to adopt a systematic approach to dealing with security. In a nutshell, this requires dealing with security throughout the complete software development lifecycle (SDLC). A highly simplified version of OWASP SAMM would typically recommend at least the following activities throughout the SDLC:

- Executive management defines and communicates to everyone the organization’s risk appetite and risk tolerance.

- Business analysts explicitly define security requirements when creating the high-level application specification.

- Architects employ security principles and patterns in order to create a (reusable) secure software architecture/design.

- Developers comply with various security policies and implement the security requirements.

- Test engineers verify, among other things, the correct implementation of those security requirements.

- Operations engineers deploy the software system on top of hardened components and patch them regularly.

Unfortunately, organizations (especially those with low security maturity) often get overwhelmed by the breadth of security activities and end up focusing on the lowest-hanging fruit. Software teams typically rely on penetration testing and various security tools to find vulnerabilities and remediate them after the software system has been developed. Obviously, fixing security defects after the system has been developed is much more costly than dealing with them at the design level.

OWASP SAMM is the complete picture, but the how and the ordering remain challenging

OWASP SAMM provides the complete breadth and depth of all security activities that you could implement. However, SAMM by design focuses on the WHAT. It explicitly states that the HOW, as well as the prioritization, depends on your organization’s realities. Here are some sample factors to consider when implementing SAMM:

- organization size,

- risk tolerance for your organization / application,

- tech stack,

- development process (e.g., agile, waterfall),

- security knowledge level of your dev team members.

The most common question I get after conducting a SAMM assessment is: where and how do we start improving? The answer is simple: invest in security requirements and mandate their verification using automated test cases.

Invest in a security requirements framework for your SDLC

We believe that leveraging OWASP ASVS to enable requirements-driven testing is the best pick for a corporate security strategy. Requirements-driven testing will likely bring the best return on investment for your AppSec programme. Your organization could even create a corporate set of standards based on ASVS. Note that you don’t have to adopt the complete ASVS. Start small with the most relevant security requirements. You could also enrich the initial list with security requirements based on your own organization’s realities (e.g., tech stack, application domain, etc.).

Adding security requirements to the sprint planning fits naturally with the overall software development process. Dev teams will consider security requirements as “just requirements” that are part of a nice and shiny feature. Test engineers will derive test cases for those security requirements and automate them just like any other test scenario. As a result, your dev and test teams will gain awareness and training in a hands-on fashion, which has a more lasting impact than mandatory yearly training. Requirements are pervasive and involve nearly every role in the SDLC, so security requirements enable effective communication between security and development teams. Finally, this common thread will prepare your teams for higher maturity-level initiatives.
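To make “just requirements” concrete for test engineers, each automated test can record which ASVS requirement it verifies, so the team can see which planned requirements still lack a test. The Python sketch below is a hypothetical illustration: the `asvs` decorator, `ASVS_REGISTRY` and `uncovered` are invented names rather than part of any ASVS tooling, and the requirement IDs are examples only (check them against the ASVS version you adopt). In a real project, your test framework's own tagging mechanism, such as pytest markers, could play the same role.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# Hypothetical registry: maps an ASVS requirement ID to the names of the tests verifying it.
ASVS_REGISTRY: DefaultDict[str, List[str]] = defaultdict(list)


def asvs(*requirement_ids: str) -> Callable[[Callable], Callable]:
    """Decorator recording which ASVS requirement(s) a test verifies."""
    def decorator(test_func: Callable) -> Callable:
        for req_id in requirement_ids:
            ASVS_REGISTRY[req_id].append(test_func.__name__)
        return test_func  # the test itself is left unchanged
    return decorator


def uncovered(planned_ids: List[str]) -> List[str]:
    """Return the planned requirement IDs that no test has been tagged with yet."""
    # Membership checks on a defaultdict do not create new keys.
    return [req_id for req_id in planned_ids if req_id not in ASVS_REGISTRY]


@asvs("2.1.1")  # illustrative ID: minimum password length
def test_password_minimum_length():
    assert len("correct horse battery staple") >= 12


sprint_backlog = ["2.1.1", "14.4.4"]
print(uncovered(sprint_backlog))  # ['14.4.4']
```

The same report could be generated at the end of every sprint to show which security requirements in the backlog are still unverified.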

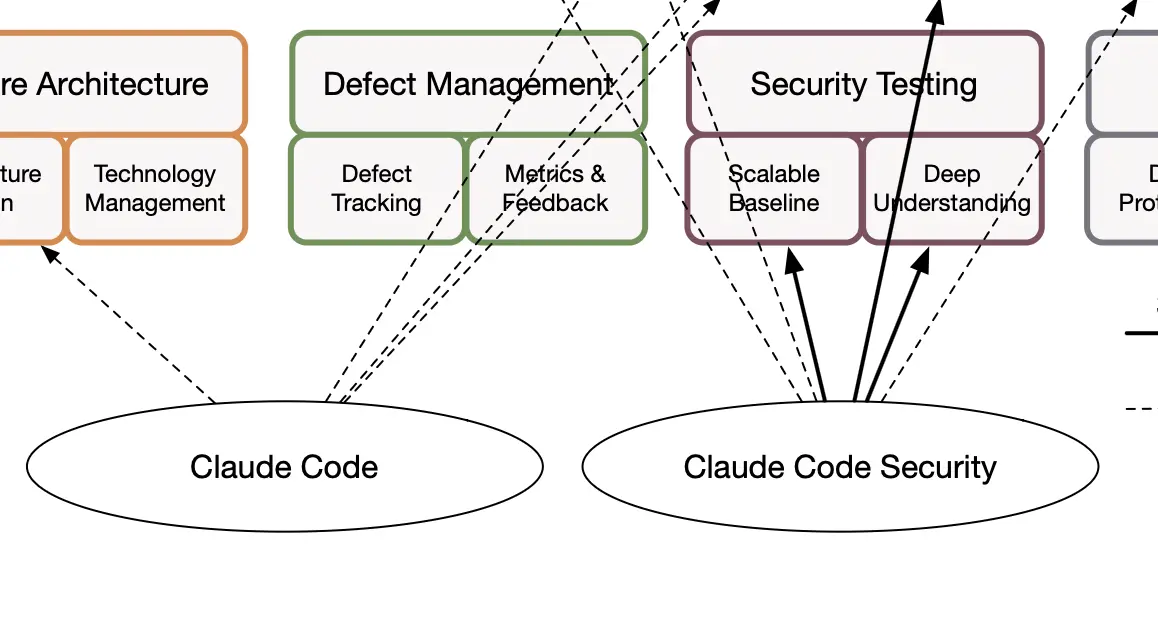

Security requirements driven development positively impacts many other security practices

Introducing explicit security requirements to your sprint planning and mandating their verification with test cases has a major impact on your overall security posture. In this section, we briefly discuss the impact on various SAMM practices (see the figure in the previous section).

Secure Build: Automated security test cases provide additional security checks in your build process

Having automated security checks and failing your build upon finding any issues is among the best practices for the Build Process stream of the Secure Build practice in SAMM. Assuming that most of your security requirements are verified by automated test cases, you will have a very high-quality feedback loop in your build pipeline.
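The build gate itself can be very small. The following Python sketch, assuming a `unittest`-based suite, runs a set of security test cases and turns the result into an exit code; most CI systems fail the build when a step exits with a non-zero code. `PasswordPolicyTests` and `MIN_PASSWORD_LENGTH` are hypothetical placeholders for your own ASVS-derived tests.

```python
import unittest
from typing import Iterable, Type

MIN_PASSWORD_LENGTH = 12  # hypothetical policy constant used by the placeholder test


class PasswordPolicyTests(unittest.TestCase):
    """Placeholder for your ASVS-derived security tests."""

    def test_minimum_length_is_at_least_12(self):
        self.assertGreaterEqual(MIN_PASSWORD_LENGTH, 12)


def run_security_suite(test_cases: Iterable[Type[unittest.TestCase]]) -> bool:
    """Run the given security test cases and report whether all of them passed."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite(loader.loadTestsFromTestCase(tc) for tc in test_cases)
    result = unittest.TextTestRunner(verbosity=1).run(suite)
    return result.wasSuccessful()


def main() -> int:
    """Exit code for the CI step: 0 lets the build continue, 1 fails it."""
    return 0 if run_security_suite([PasswordPolicyTests]) else 1


# In the pipeline's entry point, call: sys.exit(main())
```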

Training and Awareness: Ask developers to deal explicitly and systematically with security requirements

“I love creating cool new shiny features for the application I am developing. However, I absolutely hate this training on how to avoid SQL injections and XSS. The technology we use is absolutely awesome and that can never happen. So I will just click through these screens as fast as I can and get back to the real issues.”

Sounds familiar? I would be surprised if most managers were aware of this issue. If you want to start solving it, create explicit security requirements and ask your developers to implement them. Ask them to do what they love, and they will learn more about application security as a by-product with nearly zero effort.

Threat Modeling: Explicit security requirements will make your threat modeling sessions more productive

Our threat modeling sessions are freestyle brainstorming sessions focusing on what can go wrong in the part of the system we are working on. Once your team is familiar with security requirements, threat modeling sessions will feel a lot more natural to them. They will be more actively involved and will come up with more realistic threats. At least, that is my claim; it has yet to be verified.

Security Testing: Security requirements will help you improve your tooling and pen testing

Nearly all organizations leverage SAST, DAST and SCA tools in their daily practices. However, based on recent results from the SAMM Benchmarking Project, companies rarely tune their tools. Tool vendors, by design, tune their tools to produce as many findings as possible, because they want to make sure they don’t miss anything real. Based on my own experience, security requirements are the key to tuning your tools (pun intended).

If you deal explicitly with security requirements, you are more likely to clear the low-hanging fruit before the penetration testers start. They will then be able to focus on more interesting ways to break into the system. This feedback loop is actually bidirectional: you should also add any findings from the pen testers back to your security requirements and to your automated test cases.
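As an illustration of closing that loop, suppose (hypothetically) a pen test reported responses without the `X-Content-Type-Options: nosniff` header. The finding can be turned into a regression test so that it cannot silently return. In this Python sketch, `build_response_headers` is an invented stand-in for your framework's response handling; a real integration test would send requests to a running instance of the application instead.

```python
import unittest
from typing import Dict


def build_response_headers(content_type: str) -> Dict[str, str]:
    """Hypothetical stand-in for the framework code that sets response headers."""
    return {
        "Content-Type": content_type,
        # Added after the (hypothetical) pen-test finding: disable MIME sniffing.
        "X-Content-Type-Options": "nosniff",
    }


class SecurityHeaderRegressionTests(unittest.TestCase):
    """Regression test derived from a pen-test finding."""

    def test_all_responses_set_nosniff(self):
        for content_type in ("text/html; charset=utf-8", "application/json"):
            with self.subTest(content_type=content_type):
                headers = build_response_headers(content_type)
                self.assertEqual(headers.get("X-Content-Type-Options"), "nosniff")
```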

More than half of ASVS can be automatically verified, but tooling adds only about 10%

In this blog post, we present the case for leveraging OWASP ASVS as a collection of requirements that we add to the sprint planning. We subsequently verify these requirements with automated unit, integration and acceptance tests. Our goal was to maximize the portion of ASVS that ends up in automated security regression test suites, thereby making security much more tangible for the security, development and test teams. Furthermore, we also investigated how much of ASVS could be tested using SAST, DAST and SCA tooling. Note that we looked at ASVS across all verification levels.

Our analysis concludes that roughly 58% of ASVS can be at least partially leveraged as “just requirements” and added to the sprint planning. Specifically, 37% of ASVS can be verified using unit tests, 20% using integration tests and 1% using acceptance tests.

We can extend the automated verifiability of ASVS by another 10% by leveraging SAST, DAST and SCA tooling. Note that some ASVS requirements are best tackled using multiple testing strategies. For instance, output encoding should be verified using both unit tests and SAST, the latter to ensure that no unsafe HTML methods are used. Our study concludes that about 14% of the requirements need more than one testing strategy.
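A minimal sketch of the unit-test half of that output encoding check, in Python: the hypothetical `render_comment` helper encodes user input with the standard library's `html.escape` before embedding it in HTML, and the tests cover both ordinary input and a script payload. The unit test only proves that this one code path encodes correctly; SAST is still needed to spot other places where output bypasses the encoder.

```python
import html
import unittest


def render_comment(text: str) -> str:
    """Hypothetical view helper: HTML-encode user input before embedding it."""
    return f'<p class="comment">{html.escape(text, quote=True)}</p>'


class OutputEncodingTests(unittest.TestCase):
    def test_plain_text_is_rendered_unchanged(self):
        # Ordinary input must still display correctly.
        self.assertEqual(render_comment("Nice post"), '<p class="comment">Nice post</p>')

    def test_script_payload_is_encoded(self):
        # Markup in user input must reach the page as text, not as markup.
        rendered = render_comment('<script>alert("x")</script>')
        self.assertNotIn("<script>", rendered)
        self.assertIn("&lt;script&gt;", rendered)
```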

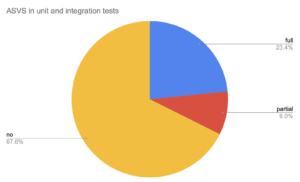

Note that some ASVS requirements cannot be fully verified. We believe you can fully verify 61.2% and partially verify 6.8% of the requirements.
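The reported percentages are consistent with each other: the three test strategies add up to 58%, tooling brings the total to 68%, and that matches the split into fully and partially verifiable requirements. The article does not state how many requirements were analyzed in total; 278 is the only count consistent with the “90 (32.4%)” reported for the experiment, and it also fits “162 (~58%)”, so treat that denominator as an inference rather than a reported figure.

```latex
\begin{aligned}
\text{unit} + \text{integration} + \text{acceptance} &= 37\% + 20\% + 1\% = 58\% \\
\text{with SAST, DAST and SCA} &= 58\% + 10\% = 68\% \\
\text{fully} + \text{partially verifiable} &= 61.2\% + 6.8\% = 68\% \\
\tfrac{162}{278} \approx 58.3\%, &\qquad \tfrac{90}{278} \approx 32.4\%
\end{aligned}
```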

What are the different test strategies for security requirements verification

In a nutshell, testing is about making sure the system does everything right and nothing wrong. There are two sides to the story: control-verification and abuse scenarios. Control-verification is about making sure the “happy path” works properly. Abuse scenarios focus on making sure the application properly handles any attempts to break it. There are several strategies for verifying that requirements are implemented correctly.
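Both sides can be illustrated with a single requirement. The Python sketch below uses a hypothetical invoice lookup (`Invoice`, `INVOICES` and `get_invoice` are invented for the example) in the spirit of ASVS access control requirements: one test performs control-verification on the happy path, the other simulates an abuse scenario in which a user tampers with the invoice ID.

```python
import unittest
from dataclasses import dataclass


@dataclass
class Invoice:
    invoice_id: int
    owner_id: int


# Hypothetical in-memory data standing in for a real repository.
INVOICES = {1: Invoice(1, owner_id=100), 2: Invoice(2, owner_id=200)}


def get_invoice(requesting_user_id: int, invoice_id: int) -> Invoice:
    """Return an invoice only if it belongs to the requesting user."""
    invoice = INVOICES.get(invoice_id)
    if invoice is None or invoice.owner_id != requesting_user_id:
        # Same error for "missing" and "not yours", so existence is not leaked.
        raise PermissionError("access denied")
    return invoice


class InvoiceAccessTests(unittest.TestCase):
    def test_owner_can_read_own_invoice(self):
        # Control-verification: the happy path works.
        self.assertEqual(get_invoice(100, 1).invoice_id, 1)

    def test_user_cannot_read_someone_elses_invoice(self):
        # Abuse scenario: changing the invoice ID must not grant access.
        with self.assertRaises(PermissionError):
            get_invoice(100, 2)
```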

What is the typical cost and effort for each testing strategy

Manual testing is all about QA engineers going through the requirements one by one. Teams often also have a Release Readiness Review (RRR) document that specifies all the requirements that have to be tested before every release. Let’s say the QA team has to make sure that the change-password feature requires the new password to be at least 12 characters long. The tester would then go to the dev/acceptance environment of the system, type in passwords of, e.g., 5, 6, 11, 12 and 15 characters, and make sure the system responds appropriately. Although this strategy might seem very cheap, as it literally takes 2 minutes, in the long term the cost of repeating it for every release starts adding up. If we automate this test, we obviously save time in the long run. Note that manual testing is primarily focused on control-verification (i.e., the happy path).
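The manual check above translates almost one-to-one into an automated unit test. In this Python sketch, `is_valid_new_password` is a hypothetical validator for the change-password feature, and the test reuses the boundary lengths the manual tester would type in. It deliberately simplifies the policy to a plain length check; ASVS 2.1.1, for instance, also specifies that multiple spaces are combined before the length is counted.

```python
import unittest

MIN_PASSWORD_LENGTH = 12  # the policy from the example above


def is_valid_new_password(password: str) -> bool:
    """Hypothetical validator for the change-password feature (length check only)."""
    return len(password) >= MIN_PASSWORD_LENGTH


class ChangePasswordLengthTests(unittest.TestCase):
    def test_boundary_lengths(self):
        # The same lengths a manual tester would try: 5, 6, 11, 12 and 15.
        expected = {5: False, 6: False, 11: False, 12: True, 15: True}
        for length, should_pass in expected.items():
            with self.subTest(length=length):
                self.assertEqual(is_valid_new_password("a" * length), should_pass)
```

Once written, this test runs on every build at no extra cost, whereas the manual version costs a tester's time before each release.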

Penetration testing (and bug bounties) are the most expensive testing strategies. The main difference is that they employ a highly experienced (and expensive) workforce to try to find vulnerabilities. Some penetration testing tasks could be automated with tools, but that is somewhat outside the scope of this blog post. Penetration testing focuses only on abuse scenarios.

Both manual and penetration testing are the slowest and most expensive strategies in the long term. Automation is key here. Once again, we have several strategies for automation, namely unit, integration and acceptance tests. SAST and DAST tools are additional testing strategies that are specific to security testing.

Obviously, all strategies have their place and all of them are useful. However, in general, unit and integration tests are the cheapest types of tests. This is true for functional requirements as well as for security requirements. In my opinion, acceptance tests are still pretty close when it comes to security requirements. Most importantly, though, the cost curve is not linear: unit and integration tests are typically a whole lot cheaper than, e.g., DAST or penetration testing.

Creating unit and integration test cases for security requirements is relatively inexpensive

At Codific, writing test cases for security requirements is part of our regular SDLC. To back up the claims in this article, we designed an empirical experiment for one of our SaaS products (a web application written in the Symfony PHP framework). Our experimental design was slightly different from an ideal scenario. More specifically, our development team had not explicitly focused on implementing ASVS requirements. As it turned out, though, the team had implemented many of them anyway. We then added 98 ASVS requirements to a dedicated sprint and asked one of our test engineers to write unit and integration tests for them. Strictly speaking, we followed a security test-driven development approach.

Our team covered 90 (32.4%) of the ASVS requirements with unit and integration tests. A number of tests actually failed, indicating that the corresponding requirements were not (correctly) implemented in the product. The test implementation effort was an 8 man-day time-boxed activity for one test engineer; because of this time box, we eventually dropped the remaining 8 requirements. A senior security analyst helped derive and refine the test cases, with a total time investment of about 2 man-days. To put this into perspective, we have spent about 4 man-years on the SaaS product.
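A rough calculation puts that effort in perspective. The 8 man-days for the test engineer, the 2 man-days for the security analyst and the 90 of 98 covered requirements come from the experiment; converting 4 man-years to man-days assumes about 220 working days per year, which is our assumption rather than a reported figure.

```latex
\begin{aligned}
\text{testing effort} &= 8 + 2 = 10 \text{ man-days} \\
\text{product effort} &\approx 4 \times 220 = 880 \text{ man-days} \\
\text{relative cost} &\approx \tfrac{10}{880} \approx 1.1\% \\
\text{sprint coverage} &= \tfrac{90}{98} \approx 91.8\%
\end{aligned}
```

Even with only 200 working days per year, the relative cost stays around 1.25%.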

Threats to validity for our experiment

Our study has a number of threats to validity; we discuss the top three. Firstly, our analyst and test engineer were already familiar with ASVS. We also had ASVS-based unit and integration tests in other projects that we could easily reuse. Perhaps less relevant, most of the ASVS requirements were already implemented in the SaaS product, making the testing somewhat easier. We believe that leveraging ASVS for requirements-driven testing is likely to require more effort in an organization that is not familiar with the standard. However, such organizations will likely also see higher returns on investment.

Secondly, we may have misinterpreted some ASVS requirements and fallen prey to a “cargo cult” approach, ending up with shallow implementations.

Finally, some of the automated tests only partially verify their respective ASVS requirements, especially for requirements where SAST/DAST/SCA tools are to be leveraged.

Experimental data

We have published our analysis in a Google Spreadsheet and plan to publish the actual test cases as well.

Conclusion

To effectively tackle the application security challenge, organizations need to deal with security throughout the entire software development lifecycle. However, organizations often struggle to find the best strategy to bootstrap that effort. We believe that leveraging ASVS as a guide to requirements-driven testing is the most effective approach to ensure that everyone involved in the SDLC takes responsibility for security. Business analysts will leverage ASVS to create the application specification. Architects will have to deal with these requirements in a very concrete way. Developers will have to implement them as “just requirements”. Finally, test engineers will have to verify them using various testing strategies. Hence, creating security requirements is a non-invasive approach to making security everyone’s responsibility.

Having explicit security requirements in the SDLC creates a common thread that is everyone’s responsibility. ASVS-driven security requirements create awareness in a natural way.