Updated: 26 September, 2025

12 April, 2024

How to use OWASP SAMM for effective security communication

Reporting with OWASP SAMM (Software Assurance Maturity Model) is very impactful when done correctly. This blog is based on first- and second-hand experience of implementing SAMM as a security programme at organizations large and small. We focus on how security leaders can communicate upwards within their organization, how SAMM can help with this, what challenges arise and how different organizations have mitigated them. We start from the perspective of senior leadership.

What senior leadership wants.

Senior leadership wants clarity, simplicity and reliability in the information they receive. Clarity, so they have good visibility of the situation at hand and its implications. Simplicity, so they can easily digest the information and know what conclusions to draw. Reliability, so they can trust the numbers. Yet using SAMM raises challenges for each of these criteria.

How SAMM is really useful.

Reporting with OWASP SAMM can be highly effective. One of the intuitively appealing aspects of SAMM is its process orientation: it doesn't dwell on specific events but looks at which processes are in place. As a maturity model, it contains much more information than a compliance model, and higher maturity levels are associated with systematic, scalable and automated processes. At the same time, it is very simple and clear to report on. Dashboards can easily be limited to one page of KPIs, yet that information is rich and easily understood. So if you measure your security programme with SAMM, you should be able to produce simple, clear and reliable reports for senior leadership. At least in theory. Does it work in practice? Not necessarily. Below we discuss some practical problems that come up and how they have been addressed.

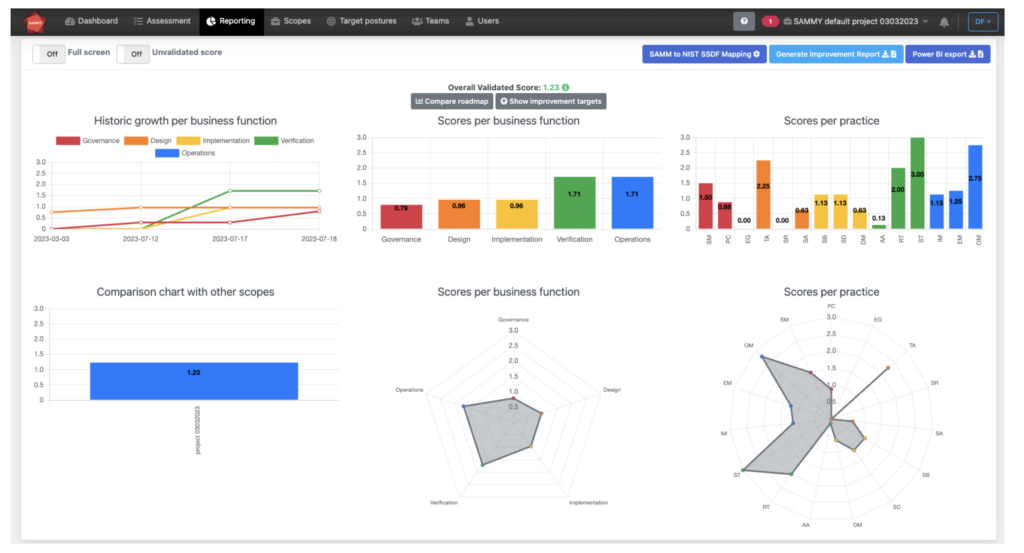

SAMM reporting in SAMMY

Challenge 1: Inconsistent scoring across teams. A reliability challenge.

Different teams assess differently and arrive at different scores. The model is intended to be generic and applicable across a wide range of industries and technological contexts. That is great, but it also means the model is not very specific: there is room for interpretation, and different teams will interpret it differently. Having all assessments done by the same person or team is possible at smaller organizations but impractical or impossible at larger ones.

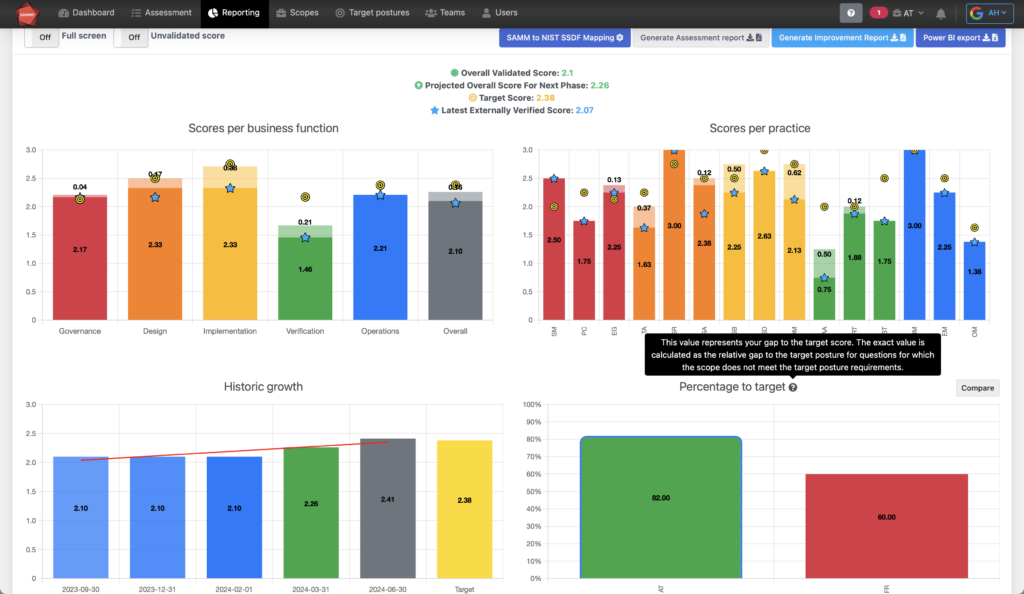

To scale consistent assessment, we see organizations implement SAMM in a quality control loop. The assessor may be someone within or close to the business unit or team in scope; they collect the evidence for each stream and create a scored assessment. The validator is a separate role: they check the evidence stream by stream and validate the scores. If the validator disagrees with a score, they provide their reasoning and send the security activity back to the assessor for re-assessment. The assessor can then either present more evidence to support the score or lower it. If necessary, this process repeats until the validator accepts the scores. In practice, however, an activity rarely goes back more than once, because the validator's feedback quickly makes expectations clear.

When a validated score falls short of the target maturity for that activity, an improvement track is initiated and assigned; once it is completed, the activity returns to the assessment stage. Having the same person or team perform all validations mitigates the reliability problem without creating excessive overhead.
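The assessor/validator loop described above behaves like a small state machine. The sketch below is one possible way to model it, assuming a single activity with a numeric target; the `Stage` and `ActivityReview` names, the method names and the example activity and scores are all hypothetical illustrations, not part of SAMM or of any SAMM tool.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Stage(Enum):
    ASSESSMENT = auto()   # assessor collects evidence and proposes a score
    VALIDATION = auto()   # validator checks the evidence and the score
    IMPROVEMENT = auto()  # validated score is below target: improvement track runs
    DONE = auto()         # validated score meets or exceeds the target


@dataclass
class ActivityReview:
    """Hypothetical record of one SAMM security activity in the review loop."""
    activity: str
    target: float
    score: float = 0.0
    evidence: list = field(default_factory=list)
    feedback: list = field(default_factory=list)
    stage: Stage = Stage.ASSESSMENT

    def _require(self, expected: Stage) -> None:
        # Guard against transitions the loop does not allow.
        if self.stage is not expected:
            raise ValueError(f"{self.activity}: expected {expected.name}, got {self.stage.name}")

    def submit(self, score: float, new_evidence: list) -> None:
        """Assessor proposes a score (optionally with more evidence) for validation."""
        self._require(Stage.ASSESSMENT)
        self.score = score
        self.evidence.extend(new_evidence)
        self.stage = Stage.VALIDATION

    def reject(self, reasoning: str) -> None:
        """Validator disagrees: record the reasoning and send it back to the assessor."""
        self._require(Stage.VALIDATION)
        self.feedback.append(reasoning)
        self.stage = Stage.ASSESSMENT

    def accept(self) -> None:
        """Validator agrees: done if on target, otherwise start an improvement track."""
        self._require(Stage.VALIDATION)
        self.stage = Stage.DONE if self.score >= self.target else Stage.IMPROVEMENT

    def complete_improvement(self) -> None:
        """Improvement track finished: the activity goes back to assessment."""
        self._require(Stage.IMPROVEMENT)
        self.stage = Stage.ASSESSMENT


# Example walk through the loop (activity name and scores are illustrative only).
review = ActivityReview("Threat Assessment / Threat Modeling", target=2.0)
review.submit(2.0, ["threat model template"])
review.reject("No evidence that threat models are kept up to date")
review.submit(1.5, [])  # assessor lowers the score instead of adding evidence
review.accept()
print(review.stage.name)  # IMPROVEMENT
```

Keeping `reject` tied to a recorded reason mirrors the requirement that the validator provides an argumentation, which is what lets expectations converge after one round.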

Challenge 2: Overall SAMM scores are the wrong metric. A clarity problem.

We all grew up getting graded in school. Because SAMM uses a point system, there is a natural tendency for everyone to want to score highly. But the overall SAMM score is a particularly bad metric. First of all, maturity level 3 (the highest in SAMM) should never be the overall goal; that would be wasteful. The goal should be set based on policies derived from the strategy, which take into account factors such as risk profile, risk appetite and business context. So companies set target SAMM scores based on company policy and strategy. But then teams start managing towards these scores, looking for the best return in points on their effort. As a result, teams can end up overshooting on some security activities and undershooting on others, producing an overall SAMM score above the policy requirement even though they are not compliant on every activity. This situation creates confusion and undermines clarity.

The solution is the gap-to-target metric, which measures the gap to the target posture: for each activity, take how far the score falls short of its target, treat overshoots as zero, and average the gaps. It provides a single metric that is easy to report on and allows a relatively fair comparison between teams or business units, even if they have different target postures.
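To make the difference concrete, here is a minimal sketch comparing a plain average score with the gap-to-target metric. It assumes scores and targets are numbers per activity and that an activity with no recorded score counts as 0; the function names, activity keys and values are hypothetical placeholders chosen only to reproduce the overshoot/undershoot situation described above.

```python
def overall_score(scores: dict[str, float]) -> float:
    """Plain average of activity scores: the metric teams tend to game."""
    return sum(scores.values()) / len(scores)


def gap_to_target(scores: dict[str, float], targets: dict[str, float]) -> float:
    """Average shortfall below target per activity; overshoots count as zero."""
    gaps = [max(target - scores.get(activity, 0.0), 0.0) for activity, target in targets.items()]
    return sum(gaps) / len(gaps)


# Illustrative targets and scores (activity names are hypothetical placeholders).
targets = {"threat_modeling": 2.0, "security_testing": 2.0, "incident_mgmt": 1.0, "training": 1.0}
team_a = {"threat_modeling": 3.0, "security_testing": 1.0, "incident_mgmt": 2.0, "training": 1.0}
team_b = {"threat_modeling": 2.0, "security_testing": 2.0, "incident_mgmt": 1.0, "training": 1.0}

for name, scores in [("team_a", team_a), ("team_b", team_b)]:
    print(name, overall_score(scores), gap_to_target(scores, targets))
# team_a 1.75 0.25
# team_b 1.5 0.0
```

Team A's overall score (1.75) beats the average target (1.5), yet it misses the target on `security_testing`; the gap-to-target metric exposes this, while Team B, which hits every target exactly, scores a perfect 0.0.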

Challenge 3: How does maturity translate to risk? A (lack of) simplicity problem.

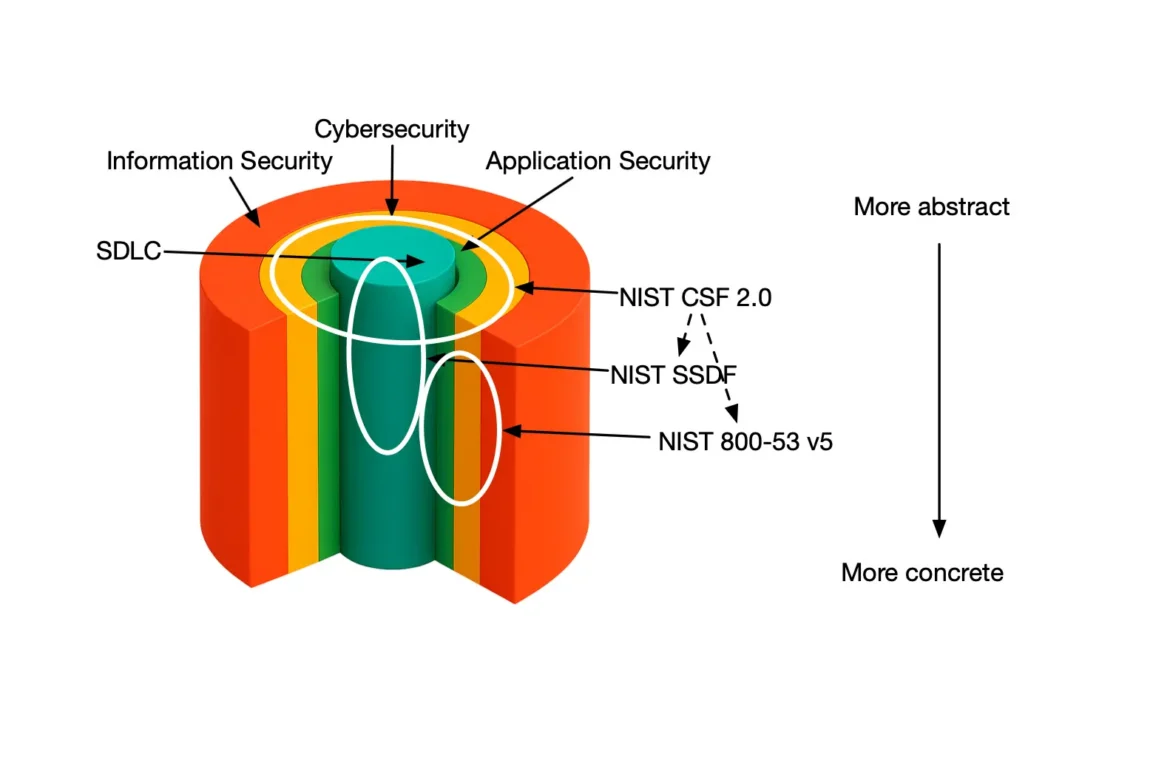

SAMM scores do not have a straightforward relationship with risk; many other factors come into play. Different security activities matter more or less in different technological environments, and similar threats carry different risks depending on the nature of the underlying business. Risk is typically mitigated through controls, but SAMM is not a control framework: it focuses mainly on people, processes and tools. This makes translating maturity directly into risk an even more complex issue.

Incident probabilities can be derived from publicly available data, and work is currently under way to link incident probability to maturity at the level of individual security activities. If business leaders then provide a reliable estimate of the impact cost of each type of incident for their specific business, we have a robust estimate of risk per SAMM security activity. ROI is then easy to assess by comparing, for the next (marginal) maturity level, the reduction in risk with the cost of reaching that level.
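The ROI reasoning above can be sketched as follows, assuming risk is modeled as expected loss (incident probability times impact cost) for a single incident type and a single activity. The function names and every number below are hypothetical placeholders, not real incident data or a published SAMM risk model; a fuller version would sum expected loss over all relevant incident types.

```python
def expected_loss(probability: float, impact_cost: float) -> float:
    """Risk as expected loss: incident probability times business impact cost."""
    return probability * impact_cost


def marginal_roi(probability_by_level: list[float], impact_cost: float,
                 step_costs: list[float]) -> list[float]:
    """ROI of each one-level step up in maturity for a single activity.

    probability_by_level[i] is the assumed incident probability at maturity level i;
    step_costs[i] is the assumed cost of moving from level i to level i + 1.
    """
    if len(step_costs) != len(probability_by_level) - 1:
        raise ValueError("need exactly one step cost per maturity step")
    rois = []
    for level, cost in enumerate(step_costs):
        risk_now = expected_loss(probability_by_level[level], impact_cost)
        risk_next = expected_loss(probability_by_level[level + 1], impact_cost)
        risk_reduction = risk_now - risk_next
        rois.append((risk_reduction - cost) / cost)  # net benefit per unit spent
    return rois


# Placeholder inputs for one incident type, NOT real data: maturity levels 0..3.
probabilities = [0.30, 0.20, 0.12, 0.10]
impact = 500_000.0
costs = [20_000.0, 30_000.0, 40_000.0]
for level, roi in enumerate(marginal_roi(probabilities, impact, costs)):
    print(f"level {level} -> {level + 1}: ROI {roi:+.2f}")
```

With these made-up inputs the first two steps pay off and the step to level 3 has a negative ROI, which illustrates (without proving) why maturity level 3 everywhere is rarely the right goal.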

This is the essence of effective reporting with OWASP SAMM:

Software has eaten the world, and SAMM is eating application security. From a managerial perspective, the benefits of SAMM far outweigh the drawbacks. Reporting with OWASP SAMM can be really impactful, but it is not without challenges.

You can mitigate several common practical pitfalls by drawing on the experience the industry has shared to date. The keys lie in:

1. Separating the assessor and validator roles.
2. The gap-to-target metric.
3. ROI calculations.

Sometimes the broader organization heeds the advice of the security team and sometimes it doesn't, but when you use SAMM for simple, clear and reliable communication, your internal impact takes a giant leap.