Updated: 2 September, 2025

2 January, 2023

Have you ever wondered about the initial steps to ensure data privacy? Do you ever think about identifying threats to privacy? Then you are in the right place. In this blog post, we will cover everything you need to know about privacy threat modeling, featuring insights from two experts in this field, Dr. Aram Hovsepyan and Dr. Kim Wuyts.

Introducing the two experts

Dr. Wuyts is a researcher at the DistriNet group at KU Leuven. DistriNet is a world-class research lab in the fields of privacy and security. She has been working in the areas of security and privacy engineering and privacy threat modeling for over 15 years. Furthermore, she is one of the creators of the world-renowned LINDDUN privacy threat modeling approach, which is used in the National Institute of Standards and Technology (NIST) cybersecurity framework created by the U.S. Department of Commerce. This framework helps businesses to better understand, manage, and reduce their cybersecurity risk and protect their network and data.

Dr. Hovsepyan is the founder and CEO of Codific. Before leading this innovative cybersecurity company, Aram was also a researcher in the DistriNet group, where he contributed to the development of LINDDUN. Within the research lab, he focused mainly on the areas of privacy and security threat modeling.

Below, you can watch the conversation I had with them. The transcript is available at the bottom of the page.

What is privacy threat modeling?

Privacy threat modeling systematically identifies and addresses potential privacy issues in systems and applications. This process includes analyzing the collection, storage, processing, and sharing of personal data to foresee and mitigate potential privacy breaches.

Why is it important?

In the podcast, Dr. Hovsepyan and Dr. Wuyts emphasize the role of privacy threat modeling in identifying and mitigating potential privacy risks before they materialize. This proactive approach is key to maintaining user trust and legal compliance, especially under regulations like the GDPR, which we will discuss later in this article. Organizations that systematically analyze how they handle personal data can prevent privacy breaches, thus safeguarding against reputational damage and legal repercussions. This underlines its critical role in a comprehensive cybersecurity strategy.

How is it carried out?

To understand the process of privacy threat modeling, one must first consider the wider concept of threat modeling. Dr. Hovsepyan explains this using a great analogy about going to the airport to catch a flight. He compares the process to assessing potential risks and preparing contingencies for a trip, involving decisions such as choosing between driving or public transportation and considering possible delays. This analogy effectively sets the stage for understanding privacy threat modeling, illustrating how it involves anticipating potential issues and strategizing accordingly in the context of data privacy and security.

The four essential questions you need to consider

When starting the process of privacy threat modeling, Dr. Wuyts mentions that there are four essential questions you need to ask yourself, based on Adam Shostack’s book “Threat Modeling: Designing for Security”, which is referred to as the Bible on threat modeling. The four questions are:

- What are we working on? This step involves creating a detailed model of the system, understanding all its components and how they interact.

- What can go wrong? This is about identifying potential privacy threats within the system, considering various scenarios and vulnerabilities.

- What are we going to do about it? Here, strategies and measures are developed to mitigate the identified risks.

- Did we do a good enough job? This final question involves evaluating the effectiveness of the implemented strategies, ensuring they adequately address the risks.
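As a rough illustration, the four questions above can be kept together in one lightweight record. The sketch below is only one possible way to do this: the class names, fields, and example threats are hypothetical and are not part of any official threat modeling tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Threat:
    """One answer to 'What can go wrong?' (hypothetical structure)."""
    description: str
    element: str                      # part of the system model the threat affects
    mitigation: Optional[str] = None  # answer to 'What are we going to do about it?'


@dataclass
class ThreatModel:
    """Hypothetical record tying the four questions together."""
    elements: List[str] = field(default_factory=list)    # Q1: what are we working on?
    threats: List[Threat] = field(default_factory=list)  # Q2: what can go wrong?

    def add_threat(self, threat: Threat) -> None:
        # A threat must point at something that exists in the system model.
        if threat.element not in self.elements:
            raise ValueError(f"unknown element: {threat.element}")
        self.threats.append(threat)

    def open_threats(self) -> List[Threat]:
        # Q4: did we do a good enough job? Here simplified to:
        # which threats still have no mitigation recorded?
        return [t for t in self.threats if t.mitigation is None]


# Illustrative data only.
model = ThreatModel(elements=["signup form", "user database"])
model.add_threat(Threat("Email addresses stored in plain text", "user database"))
model.add_threat(Threat("Form collects date of birth without need", "signup form",
                        mitigation="Drop the field (data minimization)"))

for threat in model.open_threats():
    print(f"Unmitigated: {threat.description} ({threat.element})")
```

In practice, "good enough" involves more than checking that a mitigation exists, but even a simple list like this makes the open items visible to the whole team.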

Each step requires careful consideration and expertise, often involving collaborative effort from multiple stakeholders to cover all aspects of privacy and security. Nevertheless, coming up with threats can sometimes be a challenge, especially for teams that are less experienced with privacy threat modeling. This is where the LINDDUN privacy threat modeling methodology comes in handy.

What is the LINDDUN privacy threat modeling methodology?

The LINDDUN privacy threat modeling methodology is a systematic framework developed to address the challenges of ensuring privacy in software systems. Created by experts at KU Leuven, including Dr. Wuyts and Dr. Hovsepyan, LINDDUN stands out for its structured approach to identifying and mitigating privacy threats throughout the development lifecycle of a software system.

What does LINDDUN stand for?

LINDDUN is a mnemonic representing the privacy threat categories it addresses:

- Linkability (L): The risk of an adversary linking two items of interest (e.g., data sets or activities) without necessarily knowing the identities of the individuals involved.

- Identifiability (I): The chance that an adversary can identify a specific individual from a set of data subjects.

- Non-repudiation (N): The inability of a data subject to deny a claim, such as having performed an action or sent a request.

- Detectability (D): The potential for an adversary to detect the existence of a particular item of interest related to a data subject, regardless of the ability to access its content.

- Disclosure of Information (D): The risk that an adversary can access specific data related to an individual.

- Unawareness (U): The situation where data subjects are not informed about the collection, processing, storage, or sharing of their personal data.

- Non-compliance (N): Occurs when the processing, storage, or handling of personal data does not adhere to relevant legislation, regulations, or policies.

These categories encompass a broad spectrum of privacy design issues and differ from traditional security goals like confidentiality, integrity, and availability. LINDDUN focuses on privacy-specific protection goals such as unlinkability, intervenability, and transparency.
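For teams that record findings in code or in an issue tracker, the seven categories listed above could be represented as a simple enumeration. This is a minimal sketch: the enum name and the example findings are made up for illustration, and only the category names come from LINDDUN itself.

```python
from collections import Counter
from enum import Enum


class PrivacyThreatCategory(Enum):
    """The seven LINDDUN categories, in mnemonic order."""
    LINKABILITY = "Linkability"
    IDENTIFIABILITY = "Identifiability"
    NON_REPUDIATION = "Non-repudiation"
    DETECTABILITY = "Detectability"
    DISCLOSURE_OF_INFORMATION = "Disclosure of Information"
    UNAWARENESS = "Unawareness"
    NON_COMPLIANCE = "Non-compliance"


def acronym() -> str:
    # Enum members iterate in definition order, so the initials spell the mnemonic.
    return "".join(category.value[0] for category in PrivacyThreatCategory)


# Hypothetical findings from a brainstorming session, each tagged with a category.
findings = [
    ("Session IDs reused across services", PrivacyThreatCategory.LINKABILITY),
    ("No privacy notice at signup", PrivacyThreatCategory.UNAWARENESS),
    ("Analytics events share a device ID", PrivacyThreatCategory.LINKABILITY),
]

# Count findings per category to see where the issues cluster.
per_category = Counter(category for _, category in findings)

print(acronym())
for category, count in per_category.items():
    print(f"{category.value}: {count}")
```

Tagging each finding with a category makes it easy to spot which kinds of privacy threats a session has not yet touched.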

Development and purpose

The LINDDUN privacy threat modeling methodology was developed based on the insights gained from applying the STRIDE security threat modeling methodology for security analysis. As Dr. Wuyts explains in the podcast, about twelve years ago, while working on a research project that involved security analysis using STRIDE, the team realized the lack of a similar approach in the field of privacy. This led to the creation of LINDDUN, adapting the principles of STRIDE to address privacy concerns. LINDDUN maintains the same four fundamental questions used in STRIDE (covered in the previous section) but introduces specific privacy knowledge on issues and mitigation strategies.

Why is LINDDUN important?

This methodology is essential in today’s digital landscape, where growing privacy concerns and regulations like GDPR require the implementation of Privacy-by-Design and Privacy-by-Default paradigms in software development. LINDDUN leads users through each step of the threat modeling process, ensuring exhaustive coverage and documentation. It also provides an extensive knowledge base of potential privacy threats, invaluable for both privacy experts and newcomers.

Practical application

Experts commend LINDDUN for its practical and systematic approach. It presents a model-based method that directs analysts to investigate privacy issues and locate their potential emergence in the system model. This method comprises a catalog of privacy threat types, mapping tables, threat trees, a taxonomy of mitigation strategies, and a classification of privacy-enhancing solutions. The design of LINDDUN is compatible with security threat modeling approaches such as STRIDE, enabling parallel execution and enhancing efficiency.

Variants of LINDDUN

LINDDUN comes in multiple flavors to cater to different needs, ranging from lean to in-depth analysis:

- LINDDUN GO: A lean, cross-team approach using a card deck representing common privacy threats, ideal for quick assessments and brainstorming.

- LINDDUN PRO: A more systematic and exhaustive approach focusing on all interactions within a Data Flow Diagram (DFD) to investigate potential privacy threats.

- LINDDUN MAESTRO: An advanced approach leveraging an enriched system description for more precise threat elicitation.
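To give a feel for the exhaustive, interaction-by-interaction style described for LINDDUN PRO, the sketch below pairs every flow of a tiny, made-up Data Flow Diagram with every threat category, producing a checklist for analysts to walk through. It is a brute-force illustration under our own assumptions, not the official procedure: the actual methodology uses mapping tables and threat trees to narrow down which categories apply to which elements, and the `Flow` class and example flows are hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Tuple

CATEGORIES = [
    "Linkability", "Identifiability", "Non-repudiation", "Detectability",
    "Disclosure of Information", "Unawareness", "Non-compliance",
]


@dataclass(frozen=True)
class Flow:
    """One interaction (data flow) in a hypothetical DFD."""
    source: str
    destination: str
    data: str


def checklist(flows: List[Flow], categories: List[str]) -> List[Tuple[Flow, str]]:
    # Every (interaction, category) pair becomes one question for the analysts:
    # "Could this category of threat occur on this flow?"
    return list(product(flows, categories))


# Illustrative DFD with two flows.
flows = [
    Flow("User", "Web app", "registration details"),
    Flow("Web app", "Database", "user profile"),
]

items = checklist(flows, CATEGORIES)
print(f"{len(items)} questions to review")  # 2 flows x 7 categories = 14
for flow, category in items[:3]:
    print(f"{category}: {flow.source} -> {flow.destination} ({flow.data})")
```

The number of questions grows quickly with the size of the diagram, which is one reason a leaner variant such as LINDDUN GO can be a better starting point for a first session.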

Why use LINDDUN?

Various authorities, including ENISA, ISO 27550 on Privacy Engineering, EDPS, and NIST, widely recognize and acknowledge LINDDUN. Numerous European research projects have utilized it, demonstrating its applicability and effectiveness in real-world scenarios. The methodology aligns with the GDPR’s privacy-by-design principle, aiding organizations in meeting legal and technical requirements for privacy protection. LINDDUN’s systematic methodology not only assists in identifying privacy threats but also in documenting the process and solutions, crucial for demonstrating compliance and accountability.

In summary, LINDDUN provides a comprehensive and adaptable framework for privacy threat modeling, making it a valuable tool for organizations committed to ensuring privacy in their software systems.

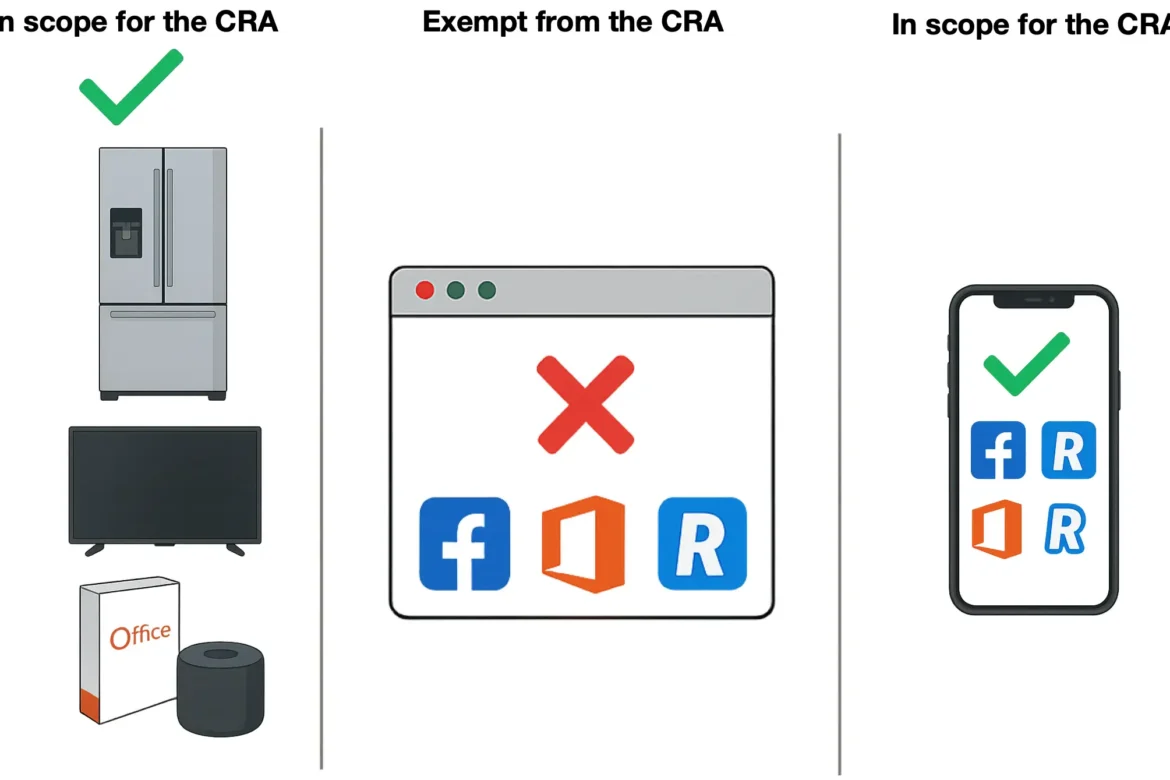

How does privacy threat modeling relate to the European Union’s General Data Protection Regulation (GDPR)?

In the previous section, we mentioned how privacy threat modeling aids compliance with the European Union’s General Data Protection Regulation (GDPR). It closely ties to GDPR’s core concepts of ‘privacy by design’ and ‘privacy by default’. While GDPR, primarily legal in nature, sets broad guidelines for data protection, it lacks specific directives for software development, making privacy threat modeling an essential practice. This methodology proactively identifies and mitigates privacy risks, ensuring that systems incorporate privacy at their core. As Dr. Hovsepyan and Dr. Wuyts highlight, privacy threat modeling complements GDPR’s risk-based approach, offering a substantive and technical method beyond mere compliance checklists, thus ensuring authentic privacy protection in software systems.

We have also written a blog about GDPR compliance in software development. If you are interested in reading it, click here.

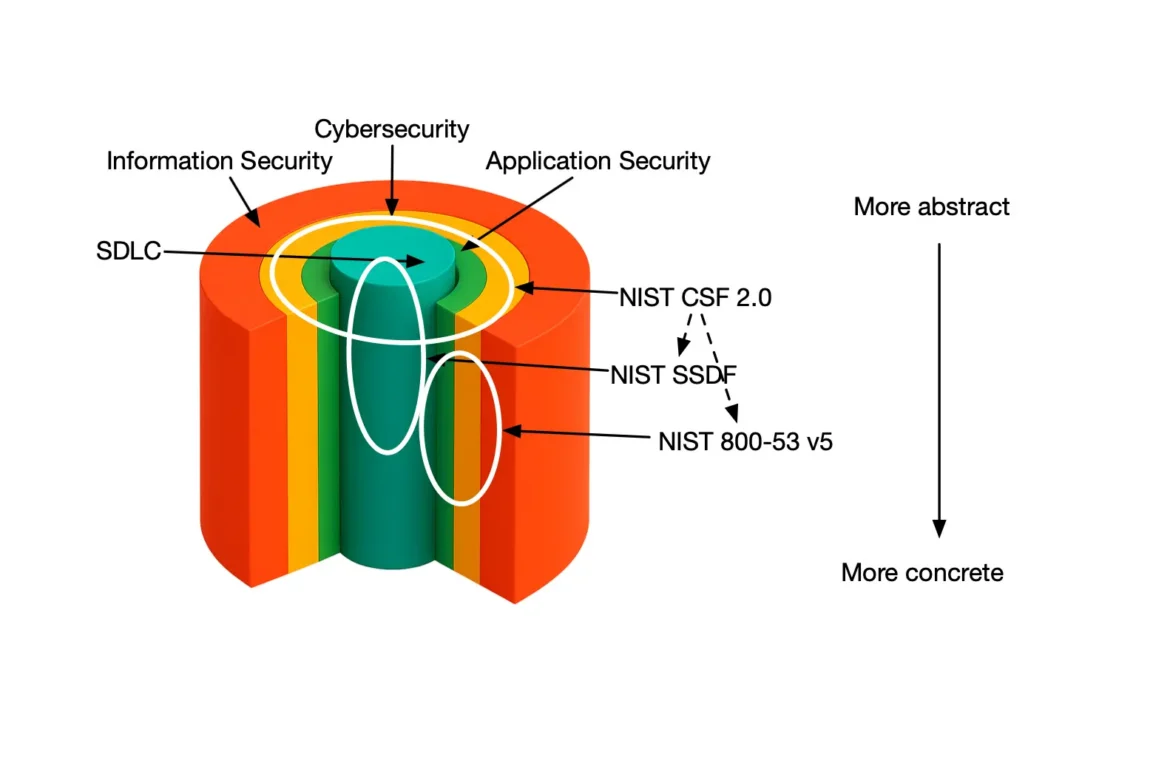

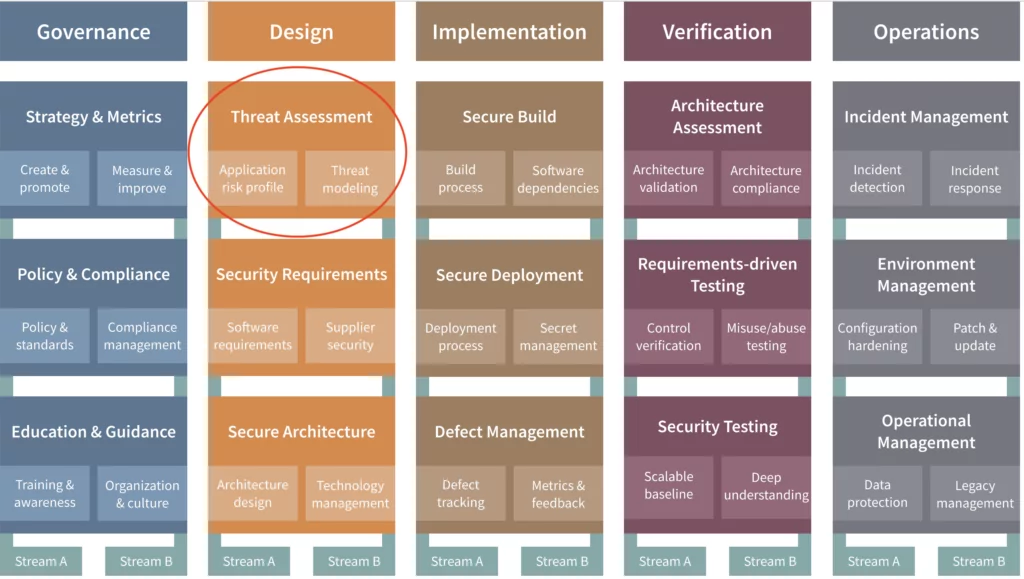

To comply with the GDPR, you will certainly need to consider privacy threat modeling as one of the various activities to maintain the security and privacy of data in an application. To map all these activities and to evaluate, validate, and improve your security posture regarding them, using an application security (AppSec) program like the OWASP Software Assurance Maturity Model (OWASP SAMM) is highly recommended. This is why, in the podcast, we also discussed the role of threat modeling when using the OWASP SAMM AppSec program.

What is the role of threat modeling in OWASP SAMM?

Threat modeling (both privacy and security) plays a crucial role in the OWASP Software Assurance Maturity Model (SAMM), especially in the Threat Assessment practice of the Design business function. In SAMM, threat modeling, including privacy aspects, contributes significantly to ensuring software security and user data protection. However, as Dr. Hovsepyan notes, it accounts for only a fraction (about 10 out of 90) of the total security-related activities that are considered in the program. Implementing SAMM effectively requires a diverse set of actions to fully assure cybersecurity and data privacy in digital products. This often requires collaboration between multiple parties, across different business units or even with external parties outside your company. Managing all of this in Excel spreadsheets is not ideal, which is why we developed SAMMY, a free tool to implement OWASP SAMM.

Concluding remarks

In conclusion, as experts Dr. Aram Hovsepyan and Dr. Kim Wuyts emphasize, privacy threat modeling serves as an indispensable component in the realm of data privacy. It aligns with the GDPR’s principles of ‘privacy by design’ and ‘privacy by default’, providing a nuanced and proactive framework to address privacy concerns. The LINDDUN methodology, which its creators shaped using insights from applying STRIDE, stands out for its efficacy and practicality in privacy threat modeling. Additionally, within the OWASP SAMM framework, threat modeling emerges as a key element among a broader range of activities essential for achieving comprehensive security and privacy assurance. This blog, drawing from the insights of these experts, highlights the critical need to integrate privacy threat modeling into organizational practices, ensuring user data protection and adherence to evolving legal and ethical standards.


Nicolas Montauban 00:05

Okay, so it’s recording now. So let me just start by introducing myself. So welcome everyone. My name is Nicolas. I’m a Market Analyst here at Codific, work in sales and also in the SEO efforts. I also helped a little bit with the development of the new Attendance Radar app. I’m from Peru and I study at Erasmus University in Rotterdam. Thank you very much for being here. I’m super excited about having the chance to interview both of you guys about privacy threat modeling. So firstly, I just wanted to ask, could you tell us about yourself and your expertise in privacy threat modeling? Maybe Kim can start.

Kim Wuyts 00:50

Sure. So I’m Kim Wuyts. I’m a researcher at the DistriNet group at KU Leuven, and I’ve been working there in the area of security engineering, privacy engineering, and privacy threat modeling for, I have to count, more than 15 years now. I am one of the creators of LINDDUN, a privacy threat modeling approach, and I have been working on extending, updating, maintaining it ever since.

Aram Hovsepyan 01:21

And I am Aram Hovsepyan and I’m the founder and CEO of Codific. Before jumping into this entrepreneurial adventure, I was a researcher at the same place at KU Leuven, which is a world-class research lab in the domains of security and privacy. I was working on security and privacy engineering and amongst a couple of other topics, my research focused on security and mainly privacy threat modeling. I was also working on LINDDUN on the more pragmatic side of it, and whether intentionally or not, security and privacy have been baked into Codific’s DNA, and we are very happy about it.

Nicolas Montauban 02:03

Yeah, I definitely can see that. Okay, so next question is, in simple terms, what is privacy threat modeling and why is it important?

Kim Wuyts 02:16

I can start maybe, and Aram, you can do some additions from the field. So threat modeling in general means that you think upfront about the things that can go wrong from a security or a privacy perspective, so you can fix it early in the development lifecycle because, well, it’s proven that it’s more cost efficient to fix it early than to fix it late when it’s already implemented. And so it’s a well-known approach in the security community, but it’s equally useful in the privacy community. I think it’s important to note that privacy is not a synonym for confidentiality, which people think. Privacy is much broader. So next to security, we need also a focus on privacy. We need to think of, do we really need this data? Can we use this data? Can we minimize the information? Can we minimize the processing? And what is all the stuff that can go wrong related to that.

Aram Hovsepyan 03:20

Okay, that’s a great start. Actually, I want to take that to the next level and look at it from the organizational perspective. So basically, threat modeling, again, like Kim said, it’s about finding things that can go wrong and that can have a harmful impact or outcome for your software system or your organization. All that provides a valuable input to risk assessment and risk management. Risk is, or it should be, it absolutely should be a central concept in any organization’s realities when it comes to security because there are millions of things that can go wrong and risk and risk score will help you figure out, okay, which of these am I going to focus on? Just to give a small analogy in human language for people to give the chance to relate a little bit more, last week I was at the OWASP SAMM summit, which was in Boston, and my flight was from Amsterdam and I live in Leuven, which is about 2 hours driving and 3 hours by public transportation to get there. Obviously, like everybody, any human being, I don’t like missing my flight and I had basically two options to get there. One was driving and in order to miss my flight or actually not to miss my flight, situations that could have led me missing my flight are obviously my car breaking down or running out of fuel or having a flat tire. On the other side, also traffic jams. Morning traffic is killing in Belgium and Netherlands, which means that I had to make sure that I leave with some extra hour of margin, for instance, and make sure that my car has fuel and my car is regularly maintained. So breaking down was not really a big concern. The other possibility was taking the public transportation, which is taking a bus to the Leuven station, then taking a train to Antwerp, and then taking the train to Amsterdam and given those chain of things, I had to think like, okay, which bus should I take? It was an early morning flight, by the way. So which bus should I take to make sure that I make the train? 
Then ideally, you want to have one bus of margin. So you don’t take the bus that gets you to the train just in time, you take the bus before that. Unfortunately, the train from Leuven to Amsterdam was the first train, so there was no margin there. But then if I would miss that train, or if that train was delayed, the train to Amsterdam, there was a second train, which would give me enough time just to make it with some comfortable margin for the flight. And this, what I’m telling you now, is threat modeling. In real life, basically, everybody threat models, all the time, probably. But ideally, you should threat model every time you are taking a flight. Make sure that you don’t miss it.

Kim Wuyts 06:18

Yeah. So we do it every day anyways, but without really realizing we’re thinking it through. So it makes so much sense to also do that explicitly when we’re building systems, when we’re building products, to think about the security and the privacy stuff that can go wrong as well. Yeah. So did you end up taking the bus and the train, or?

Aram Hovsepyan 06:37

I took the bus and the train, and it was way before the flight there. I could have taken, like, three trains later and still make it.

Kim Wuyts 06:48

Yeah, but still, better safe than sorry. So it’s a threat modeling habit, right?

Nicolas Montauban 06:52

Yeah, that was a super interesting analogy, actually. I had never thought of it in that way before. But, yeah, it’s good that you arrived quite early because that particular airport has a lot of problems right now, the Schiphol airport. The next question is a little bit more about the process. So can you explain the process of modeling the threats to privacy? So, for example, what are the different steps that usually need to be taken? I understand, obviously this process is very different depending on the organization, on the product, on a whole variety of different things. But in general, what are the different steps that you usually take?

Kim Wuyts 07:29

Sure. Should I? Will you?

Aram Hovsepyan 07:33

Yeah, you can start, Kim.

Kim Wuyts 07:34

Okay. I will continue with Aram’s analogy of getting to the airport. So well, basically, there’s four steps, four questions. They were coined by Adam Shostack, who wrote the Bible on threat modeling. And it’s, what are we working on? What can go wrong? What are we going to do about it? And did we do a good enough job? So the first thing is you visualize what you’re working on. So you need to have a model of the system. In the scenario of going to the airport, it’s kind of visualizing the entire trajectory of getting from Aram’s home to the airport. Then the next step is, what can go wrong? So clearly, he didn’t want to get there late, so he was thinking of all the things that can go wrong, he just explained it, so I’m re-explaining his scenario, which I had no part in, but, like, the bus running late, the train being canceled, or getting some delay, that’s all the stuff that you can think of up front that can go wrong. Then how will you fix it? How will you mitigate it? Well, you take some additional time. You take a backup train and so on. And the same applies to security and privacy threat modeling. You start with the model of the system and then you go over each of the interactions of the elements there and you think, what can go wrong from a security or from a privacy perspective? The tricky thing is that, well, in this example of the trains, we all know what can happen. Trains run late, get cancelled, but from a security and a privacy perspective, it’s less obvious. You need to be an expert. You need to have a lot of experience. The good thing is that there are approaches such as STRIDE for security and LINDDUN for privacy that bring this knowledge into the picture as an approach, as a process. So STRIDE has a lot of information on security issues that can go wrong that are known. 
LINDDUN does the same from a privacy perspective, by the way, STRIDE and LINDDUN are actually also acronyms for the different security and privacy categories for which they also provide information. So from LINDDUN perspective, it’s linking, identifying, non-repudiation, detecting, data disclosure, unawareness, and non-compliance. So in these categories, that approach provides information on what can go wrong. Then that information, that knowledge, can be used to analyze the specific system and determine all the stuff that can go wrong. Does it apply to my specific system? And then in the next steps, you can further mitigate that. Aram, feel free to.

Aram Hovsepyan 10:37

I think you really covered everything. I just want to throw in some tribe knowledge or some fun facts that a bad threat model is still way better than no threat model. Typically, if you’re a starting threat modeler, first of all, for an organization, you should probably invite an expert because it could be challenging to do it. On the other hand, you could also take various courses, sorry, trainings that are often organized with large security conferences like Black Hat, all OWASP conferences and so on. And yeah, I would say that at the lowest level of expertise, threat modeling, you would just go through the mnemonics like STRIDE and LINDDUN and try to come up with threats based on those. The further you mature, the more advanced strategies you can use and of course, the expertise, an expert will come up with threats just like that. Versus somebody who has no experience in threat modeling, he might not be very quick. It might take him more time.

Kim Wuyts 11:49

Yeah, yeah. It might sound overwhelming, especially if you read the information on how to apply STRIDE or how to apply LINDDUN. And ideally, you spend a lot of time actually analyzing the system, which can be for people getting started like, I don’t have the time, cannot invest that much in it. But as Aram says, even the slightest additional effort you can do, even if you start with just 15 minutes of brainstorming and thinking of security and privacy issues there, it’s already much better than not considering it at all. So build it up and go from tiny efforts to a well integrated threat modeling program or practice.

Aram Hovsepyan 12:39

And by the way, we are mentioning now, you should do it. You should do it. You, in a sense of the team. So that threat modeling should happen in a group where at least your software architects are on board, your security champions, your development team, you can invite a lot of different kind of stakeholders, but those should be definite in there. Your QA could as well participate. Actually, the recent threat modeling exercise we did, our QA came up with quite some interesting threats, which nobody could think of.

Kim Wuyts 13:18

And a side effect of having all those people around the table, which we hear a lot from other companies, is that that first step of threat modeling, knowing what you’re working on, that system model, that joint understanding of the system, is often something that is new to those organizations because all those different profiles, they know bits and pieces, but getting that big picture and getting a mutual understanding, a mutual view on the system, seems to be something that was not there yet. So, in addition to having that great analysis of security and privacy, you get that joint understanding of the system and people are really coming together and discussing it.

Nicolas Montauban 14:02

Yeah, wow, that’s definitely quite an elaborate process. I understand. Obviously, as Aram mentions, for example, the expertise you have obviously plays a big role in it. Just a bit of follow up question before we move on to the next main question. You were mentioning that it’s important to do it as a team. Because then you have multiple people thinking about the possible threats to privacy and in general, more so in the case of big organizations, it’s usually also a good practice to consult, like external, for example, an external consultancy firm that also does privacy threat modeling. Is it usually a good idea to also get other people, maybe that are not from your organization, but from external sources, to also try and do privacy threat modeling in your organization? Or is it usually better to just keep it within the walls of your company?

Kim Wuyts 15:04

Go ahead.

Aram Hovsepyan 15:04

I would say that if you have no experience in threat modeling, you should probably invite an external expert who will then moderate and help you threat model. But in terms of once you have that process set up, once, let’s say, your security champions have mastered threat modeling and are threat modeling experts, I don’t think that an external party will be of much help because they are also not likely to understand your system as good as your own team does.

Kim Wuyts 15:36

Yeah, I agree. I was also thinking of in the first phase, setting it up. It’s useful to have somebody there but the end goal is to do it yourself and have security champions, privacy champions, fill that role there.

Nicolas Montauban 15:52

Yeah, makes sense. Okay, so moving on to the next question, this is, how does threat modeling relate to other security related activities? So, for example, when we’re coming up with the questions, we were thinking also about, for example, the SAMM framework, where it’s basically an idea of how you have different areas of your company and how you can improve the cybersecurity in all these different areas. So how, for example, can privacy threat modeling help do this? Yeah, maybe Aram can start in this question.

Aram Hovsepyan 16:31

Yeah, you mentioned SAMM, so I will piggyback on that. Well, SAMM is a maturity framework, security maturity framework, and security assurance program that allows any organization to, first of all, measure where they are in terms of security posture and then formulate a strategy that consists of small improvements over time and then demonstrate that growth and those improvements. And SAMM covers all areas in the software development lifecycle, including design, where threat modeling largely is situated. So, threat modeling, I would say threat modeling covers a lot of possible security issues or improvements or activities in that design phase. But from SAMM perspective, there are about 90 activities, security related activities, in application security program during the development of an application, of a software application, and threat modeling covers, in its most advanced version, probably ten out of those 90 activities. So it’s a great start, but it’s still about 10% of what you should be doing if you want to be a security champion. Of course, you don’t have to be doing all those 90 activities because you need to start from something I mentioned earlier. What is your risk appetite and what is your risk profile and what is risk? How important are security issues, given your organization and your software system? But I would say that threat modeling should come back in most organizations and most software developments. And I think it really makes a difference between a pro in terms of software firm and team versus amateurs and wannabes. Unfortunately, according to the BSIMM benchmark, and BSIMM is an alternative assurance program to SAMM, according to their study, only 16 out of 130 firms out there are doing some form of threat modeling. Not the most advanced, but some form. And I’ve also spoken with some practitioners, which is highly not statistical data, but they also agree that 1% to 10% of software firms and teams are doing threat modeling out there. 
That’s not great. I don’t know if that answers your question.

Kim Wuyts 19:12

Yeah, I just like to add on to this. You mentioned it’s like one of the, I forgot already how many activities within the framework and the development lifecycle so indeed it’s situated early in that design phase, but it’s just important to highlight once again that it should not just be seen as that one activity in an isolated box. The goal is not to just write a document and say, look, we spent 2 hours or 5 hours or whatever, and this is like the ten most important threats we came up with. The usefulness of threat modeling is then using that information and using that as input for the subsequent phases to really use that and make the system better, more secure, more privacy aware. I think there’s one of the NIST recommendations on, I forgot which one, but it mentions to have threat modeling also used as input for the final testing because when you know what you want to avoid, and you should also, once it’s already implemented, see whether the system actually fulfills that requirement. So yes, it’s probably just one of those activities, but it has an impact on other activities as well, or it should at least.

Aram Hovsepyan 20:34

Actually I wanted to add to that that indeed, threat modeling is not something you do and you give it as a paper to the management. But the outcome of threat modeling should be a list of threats, and then you should definitely document that list in some tracking system or an issue tracking system, for instance. Then you should prioritize some of those threats and keep others maybe in a backlog eventually. Like also, Kim said, you can do more things with those threats. First of all, you can solve them, but you can also design security regression tests, which will then check if that threat is correctly solved and if it’s maybe regressing, whether that’s automatable or not, that doesn’t matter much. You can also have a QA who will have to look at it every time a new release is being added.

Nicolas Montauban 21:33

Okay, so from what I understand, privacy threat modeling is very much within the initial phases, right in the design phase, but however, it should be applied still throughout the process, especially when testing the system as well, just to ensure that the threats are actually dealt with. When talking a bit about applying privacy threat modeling. I know you both helped in the development of the LINDDUN privacy threat modeling methodology. I understand it’s a very important methodology, and it was even introduced in the NIST, if I’m not mistaken. So could you maybe explain what it is and what it’s used for and maybe examples of where it’s used in the industry already?

Kim Wuyts 22:21

Sure. So it was created again, I have to count, I think, twelve years ago, and we’ve updated it a couple of times. We’re actually working on an update now, so stay tuned. We will soon release some more information. So the idea is actually, the origin story is we’ve been working on a research project. We were responsible for the security analysis, so we applied STRIDE and then we collaborated with people for the privacy analysis and, well, we realized in the field of privacy, there was no such thing as privacy threat modeling back then. So that made us wonder, like, how can we help here? So we looked at what we like about STRIDE and we updated that for privacy as well. And we’ve been doing that ever since. So the idea is that we stick to the same four questions that we mentioned before, but that we introduced privacy knowledge there, on privacy concerns, on privacy issues, on suggestions for privacy mitigations, and we bundled that according to the seven LINDDUN categories, which are linking, identifying and so on. We started with, again in line with STRIDE, with some threat trees that capture all that knowledge, the threat issues, the threat types. But we noticed that, well, as Aram mentioned earlier, people will get started with just brainstorming about the high level categories, and that’s great, but you need a lot of expertise to pull that off properly. So we were looking at, how can we meet in the middle, still get some additional information there, but not overflowing them with all that information in the threat trees, and in a very systematic approach. So a couple of years ago, we introduced LINDDUN GO cards, which is a more lean approach to applying basically the same knowledge. 
I have a deck here. It has some guidance questions to help you think about what a threat is about and whether it applies to your specific system. Again, this is in line with STRIDE, because for STRIDE there’s also a card deck, the Elevation of Privilege game, which is a more gamified version of how to do that properly. Well, we noticed that people need different shapes and sizes of how to apply threat modeling. So that also triggered us now to revise our foundation once more to get the base straight, so we can extract support for a more extensive, formal approach as well as a leaner approach, but all from the same foundation, so we can better accommodate the different needs of the different privacy threat modeling analysts. So that’s up and coming. So stay tuned. It will hopefully soon be released on the website. Aram, I don’t know if you know.
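To make the idea of category-driven guidance questions concrete, here is a minimal Python sketch. It is not the LINDDUN GO deck or any official tooling: the element names, the question wording, and the `guidance_prompts` helper are all assumptions made for illustration. It simply pairs each element of a system with each of the seven LINDDUN threat types so that a team has a systematic list of questions to walk through.

```python
from enum import Enum
from itertools import product


class LinddunCategory(Enum):
    """The seven LINDDUN threat types."""
    LINKING = "Linking"
    IDENTIFYING = "Identifying"
    NON_REPUDIATION = "Non-repudiation"
    DETECTING = "Detecting"
    DATA_DISCLOSURE = "Data disclosure"
    UNAWARENESS = "Unawareness"
    NON_COMPLIANCE = "Non-compliance"


# Hypothetical system elements for an imaginary newsletter service.
ELEMENTS = ["signup form", "subscriber database", "email provider"]


def guidance_prompts(elements):
    """Pair every element with every threat type as a brainstorming question."""
    return [
        f"Does the {category.value} threat type apply to '{element}'?"
        for element, category in product(elements, LinddunCategory)
    ]


if __name__ == "__main__":
    for prompt in guidance_prompts(ELEMENTS):
        print(prompt)
```

A list like this only structures the brainstorm. Deciding whether a threat really applies still takes the kind of privacy knowledge the cards and threat trees are meant to supply.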

Aram Hovsepyan 25:50

You were very extensive. I don’t think I have to add anything. Did we talk about the difference between privacy and security?

Kim Wuyts 25:56

So far, no. Not yet.

Aram Hovsepyan 25:59

It’s important to mention, because many people get confused. Well, me and Kim obviously never. What is security and what is privacy? Well, there is typically no privacy without security, but a perfectly secure system could still have threats in terms of privacy. Actually, there is even a category where security and privacy are contradictory in a way. Having auditability in a system is a great thing from a security perspective. Well, you should have it. On the other hand, auditability leads to issues in privacy. In privacy, you typically want to have deniability, so being able to say, hey, I didn’t do that. And if you have perfect security and perfect auditing in place, there is no way that you can. Well, there are ways, but then it somehow starts to be in conflict with that category. That’s the one extreme category, of course, where things contradict each other. But on the other hand, you can again have a perfectly secure system. For instance, say you are a dissident in a dictatorship or some oppressive country and you want to communicate with an agent in the US or another country. You could have a perfectly secure system and send perfectly secure messages, but if the government knows the mere fact that you’re sending a message to another country, which is a democratic country, game over. That is an example of a privacy issue. It’s perfectly secure, so nobody can access it, nobody can read what you’re sending, but the fact that they know that you’re sending is an issue. It leaks data. Side channel attack, Kim.

Kim Wuyts 27:57

Yes. Yeah, my mind is popping with stuff I want to add, but let’s see if I can focus. So the first thing is what Aram was mentioning. We have non-repudiation and plausible deniability. One is a security property, the other is a privacy property. So we have this kind of conflict here, and the same goes for anonymity and confidentiality. People feel like this is a problem. Especially when we talk to security people, they’re like, wow, privacy, really? You make our lives so difficult and we don’t like it and can we please ignore it? But it shouldn’t be the case. I mean, take non-repudiation and plausible deniability. I have yet to come across an example that really is a conflict, even in an online voting situation, where you have a strong need for non-repudiation about the fact that you voted. Well, at least in Belgium, and I think in the Netherlands as well, we have to go voting. So we need to have proof that we voted. That’s something that we need to have. But on the other hand, nobody should be able to know who we voted for. So we need to have plausible deniability about the actual vote, but non-repudiation about the fact that we voted. So within that same action, you can still have non-repudiation and plausible deniability, but on different elements of it, so they can perfectly coexist. The same also goes for, well, yeah, I want strong authentication because I want to hold people accountable. But you also have all kinds of pseudonymous or anonymous credential solutions that will still allow you to hold people accountable in case there is a specific issue, but in most cases do it in a more privacy friendly way. So there are always, I think so at least, solutions to do it in a more privacy friendly, privacy aware way. It might require you to rebuild, rethink the system a bit.
Maybe you’re focusing too much on that idea of “I want to collect data because data is the new gold or the new oil or whatever”, and that’s still a mindset we need to get rid of. But that’s a different discussion, probably. So, yeah, privacy is different from security, but it shouldn’t conflict with security, and privacy is important. If you think of yourself as a user of a system, as a data subject, then it makes total sense that companies should not use data for different purposes and should act in your best interest. But for the organizations, that mind shift still needs to happen. Fortunately, we have GDPR and other legislation that really forces companies to focus on privacy as well and integrate it properly and by design.

Nicolas Montauban 31:16

Yeah, it’s funny that you mentioned GDPR, because our next question is specifically about that. For those that do not know what GDPR is, I’ll just give a brief explanation, but I’m sure you guys can go much more in depth. GDPR is the General Data Protection Regulation, which is basically the set of laws given by the European Union around privacy and security in data, which all organizations that collect data from people in Europe need to follow. Specifically, what I wanted to ask you is, can you explain how privacy threat modeling relates to GDPR and, getting a bit more technical with it, how it relates to the privacy by design and privacy by default paradigms which GDPR wants to incentivize? Maybe, Aram, you can start.

Aram Hovsepyan 32:12

Okay, well, actually, privacy and security, let’s just call it threat modeling. It essentially tackles those things from GDPR that you mentioned, privacy by design and privacy by default, as well as a section on the technical measures and the technical countermeasures. Now, unfortunately, GDPR is legislation written by legal experts who are great at what they do. But no offense, they have not a single clue about software development. I don’t have the impression that they understand the difference between security and privacy. And just throwing in this nice term, privacy by design, probably seemed cool, but it doesn’t mean anything. Privacy by design is a buzzword meant to encourage, or require, thinking about privacy properties before actually developing the software. I would say from there on, there are two approaches. Either you go with a checklist based approach, which is not a bad idea, but again, checklists may become impossible to manage, although you could always start with checklists. Or you can actually go and do privacy threat modeling, which is analogous to a mathematical proof by contradiction. So the purpose is to find a contradiction, and then you know, okay, there is a privacy threat, so this is not okay. Yeah, that’s more or less what I wanted to say, but I’m not sure GDPR works, to be honest. Recently I had a personal experience. I get more and more calls from random people trying to sell me stuff. I always wonder, where the hell do they get my phone number? So I did a Google search and apparently there is this firm called Rocket something. Rocket launch. Rocket space. Well, it’s a firm that sells data, or even gives it away for free, on everybody who is leading an organization, and they had all the phone numbers that I ever had across the years.
I searched more and apparently the Luxembourg data protection authorities have flagged those guys already and have said, yeah, they are in violation of the GDPR, but we’re not going to do anything about it. That’s my personal experience with the legislation. Ideally, though, threat modeling is the thing that you should use to make sure that you’re, I don’t want to say compliant, but to make sure that you play by the rules.

Kim Wuyts 34:51

Yeah, yeah. So Aram mentioned it before. GDPR has this one sentence saying you need to implement appropriate technical measures, but what counts as appropriate technical measures is very vague. So that’s where threat modeling is really useful. I do want to add, I don’t know if I remember the quote by heart, but I read somewhere recently that privacy is not a legal obligation that has technical implications, but actually a data problem that has legal implications. So the goal is to build a secure and privacy aware system. To force people to do it, you now have GDPR and other legislation, and if you don’t do it, then there might be a fine or some consequence. I’m an academic, so I can be idealistic, but the whole idea is that an organization should ideally have that general culture of wanting to do it in a secure and privacy friendly way anyway, and by doing that, the compliance part is basically covered. That’s much better than tackling it in the checkbox compliance kind of way, where you say, well, okay, let’s see. Privacy, okay, check, because I have some consents for newsletters and I updated my privacy policy and look, I found this obscure PET that we implement at the end of our lifecycle, so we have some pseudonymization of some of the data. It’s really about implementing it at the core of the system and at the foundation. That being said, people are typically still looking for GDPR compliance, and threat modeling is indeed a great step there because it’s also risk based, similar to GDPR, which mentions risk a lot of times. I think it was 75 times, I don’t know, I think we counted it once. So it’s really risk oriented, same as threat modeling, but then from a technical perspective.

Nicolas Montauban 37:17

Yeah. Thank you. From this, we obviously see that organizations are being kind of forced to be more privacy aware and to do privacy threat modeling in order to comply with GDPR, which also creates the need, I guess, for more people that are capable of doing privacy threat modeling, right?

So our next question is actually a bit related to that. It’s more about privacy threat modeling as a career path. So just as a question, would I, for example, be able to become a privacy threat modeler? Is it a real job, or is it usually an activity you do within other roles? So, for example, you’re the security officer and you do privacy threat modeling as one of your activities, or are there people that actually dedicate themselves to it? I know academically it is the case, but within an organization, are there sometimes people that are 100% just the ones that do privacy threat modeling? In general, what does the career path of someone that wants to do privacy threat modeling look like?

Aram Hovsepyan 38:29

I would say that typically a security champion would take that role. Software architects could also add threat modeling to their belt. Yeah, everybody can threat model, like we said in the beginning, and it’s absolutely a real job, but I think that it’s a responsibility that falls within the role of a security champion or a security expert who does other things along with that.

Aram Hovsepyan 39:07

In terms of where to start, you could go and do some research work or do a PhD at DistriNet and then you’re ready to go.

Kim Wuyts 39:20

Yeah, we are always looking for more people, so if you’re interested, please come join us. But basically I think it’s part interest as well. The background Aram mentioned is important. In addition to the security engineer, there is the privacy engineer, of course, if you’re thinking about privacy threat modeling. I kind of lost my train of thought here, but yeah, basically anybody can do it.

Kim Wuyts 39:55

You asked, is it a designated role? It depends on the organization. If it’s a big organization, then it probably can be. I know there are some consultancy firms who focus specifically on bringing threat modeling to companies. So obviously, if you work there, then you will be a full time threat modeler. But yeah, it depends, to answer as a lawyer.

Nicolas Montauban 40:25

And as you mentioned, big organizations would usually have more designated people to do privacy threat modeling, and they probably have much more capability when it comes to privacy threat modeling. However, our next question is about the opposite case. If you have a young startup, a small company which obviously has much more limited resources, where should you start? Maybe you’re a small software company that needs to do privacy threat modeling. So where should you start, and in general, what should your priorities be when it comes to modeling privacy threats?

Aram Hovsepyan 41:06

Well, from my perspective, unless you are developing security and privacy specific products, unfortunately you have to forget about any threat modeling and security. You should survive first. So that’s a bit of a somber view on things, for a startup at least. If you are developing security and privacy specific products, you should just call Codific and we’d be happy to assist you. Joking aside, you should go and do a threat modeling training. Many conferences organize those, like the ones I mentioned, Black Hat and the OWASP conferences. It’s a regular event that pops up virtually anywhere on the planet. I would say the biggest names are Adam Shostack and Sebastien Deleersnyder. They often organize trainings. I think it’s a one day training. It will give you a jump start and then you can read books. As a startup, you could do it yourself. I would say if you have a limited budget, you don’t really have to call an expert. Your threat model quality would then not be the best, but again, it’s better to have a bad threat model than to have no threat model and not do threat modeling at all. You can also read the books that those guys have published, and there are quite some resources on YouTube if you want to learn more about threat modeling. Yeah, but in terms of security, it’s a good idea to start from threat modeling, to do threat modeling.

Kim Wuyts 42:49

I have to add some nuance to the answer, because you kind of said ignore privacy. That’s maybe a bit too strongly put.

Aram Hovsepyan 42:57

No, I didn’t say ignore. The problem is you have limited resources and then you need to release a product, and typically you’re not going to focus on anything about security and privacy. That’s the reality. I’m not saying you should.

Kim Wuyts 43:14

Yeah, I think you should at least ask yourself the question, when you are processing personal data: do I really need it all? Can I minimize it a bit? Because, if you think about it, for all the personal data you’re collecting, you’re responsible for keeping it safe, keeping it secure, and not abusing it. So whenever anything goes wrong, when there is a breach, when you share it with too many third parties or whatever, you might be fined, people will sue you. There might be real personal damages there, depending on the impact of the information. So it might sound like, who will find this tiny startup? But at least consider: do I really need it, can I maybe change my idea a bit so that I can reduce the personal data I collect? That’s the first step there. Then, if you have time, look at LINDDUN. Well, I think it is useful to at least get that basic understanding of security and privacy, so that you at least have somebody who is knowledgeable about the basics, and go from there. You can then extend that into a full threat modeling program that is integrated in the development life cycle or in the company structure.
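The "do I really need it all?" question can be turned into a very small habit in code. The Python sketch below is only an illustration under assumed names: the `REQUIRED_FIELDS` allow-list, the field names, and both helper functions are hypothetical. It keeps only the fields a feature has declared it needs and reports what was dropped, so that collecting a new field becomes a deliberate decision.

```python
# Hypothetical allow-list: the only fields this imaginary signup feature needs.
REQUIRED_FIELDS = {"email", "display_name"}


def minimize(record: dict, required: set) -> dict:
    """Return a copy of the record containing only the required fields."""
    return {key: value for key, value in record.items() if key in required}


def dropped_fields(record: dict, required: set) -> list:
    """List the fields that were submitted but are not needed."""
    return sorted(set(record) - required)


if __name__ == "__main__":
    submitted = {
        "email": "user@example.com",
        "display_name": "Sam",
        "phone": "000-000",
        "birth_date": "1990-01-01",
    }
    print(minimize(submitted, REQUIRED_FIELDS))
    print("Not stored:", dropped_fields(submitted, REQUIRED_FIELDS))
```

An allow-list rather than a block-list is used here on purpose: anything not explicitly justified is not stored by default.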

Aram Hovsepyan 44:49

Yeah, I agree, actually. Thank you for that, Kim. At least thinking about not collecting data that you’re not going to need or not collecting data, even if you think I might need this in the future, is a great idea, then you reduce your liability in a way.

Nicolas Montauban 45:10

So, keeping the focus on a startup, this last question is definitely going to be more for Aram. But then maybe afterwards, Kim, you can also give your opinion on whatever Aram answers. So to finish it off, how do Codific’s applications use privacy threat modeling?

Aram Hovsepyan 45:30

I thought the definition of a startup is a company that loses money. I don’t think we’re a startup.

Aram Hovsepyan 45:41

Good question. So now this is where reality comes in. Like we said so far, threat modeling is something you should do before you start developing a system. We kick in threat modeling when we have already started a little bit with the system. So you don’t have to do threat modeling in a waterfall approach. You can also do it in your agile sprints, and this is what we do. Before some of the sprints, we kick in some threat modeling sessions which are scoped, so they are relatively limited and time-boxed. Then basically we create a diagram, a data flow diagram of the system, and we open the floor for finding any issues, any problems, and all the findings go to a list. After that, we start prioritizing that list and saying, okay, this stuff we’re not going to look at, these are things which we are going to solve now, and these are things which we’re going to solve later. On the other hand, I also have to say that many of our team members have a security background. They go to regular security trainings. So everybody is almost all the time running threat models in their head. I’m not saying that you should do this. This is not okay. But still, having high security awareness in the team will also make sure, and this is something we do, that they come up with threats just like that, without doing an actual threat modeling exercise. Still, you should start with the first one and then get the other one, or vice versa. That’s how we do threat modeling. We typically do security threat modeling. Privacy threat modeling we don’t, because in our applications we are not in the role of the data controller, so we don’t control what data should be processed. We do use data minimization principles, so we don’t collect anything that’s not necessary. If something is necessary, then that comes from the data controller. So we don’t have any liabilities there.
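The prioritization step described above, where every finding goes to a list and is then sorted into "not going to look at", "solve now", and "solve later", can be sketched in a few lines of Python. This is not Codific’s actual process or tooling. The `Finding` class, the 1 to 3 scoring scale, the thresholds, and the example findings are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One issue raised during a time-boxed threat modeling session."""
    description: str
    impact: int      # 1 (low) to 3 (high); hypothetical scale
    likelihood: int  # 1 (low) to 3 (high); hypothetical scale

    @property
    def score(self) -> int:
        # Simple impact-times-likelihood risk score.
        return self.impact * self.likelihood


def triage(findings, now_threshold=6, later_threshold=3):
    """Sort findings by score and split them into three buckets."""
    buckets = {"solve now": [], "solve later": [], "not addressed": []}
    for finding in sorted(findings, key=lambda f: f.score, reverse=True):
        if finding.score >= now_threshold:
            buckets["solve now"].append(finding)
        elif finding.score >= later_threshold:
            buckets["solve later"].append(finding)
        else:
            buckets["not addressed"].append(finding)
    return buckets


if __name__ == "__main__":
    session_findings = [
        Finding("Verbose error messages reveal stack traces", 2, 2),
        Finding("Session token sent over an unencrypted flow", 3, 3),
        Finding("Login page banner shows the framework version", 1, 1),
    ]
    for bucket, items in triage(session_findings).items():
        print(bucket, [item.description for item in items])
```

The thresholds are arbitrary. What matters is that each finding ends up with an explicit decision, including the decision not to address it.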

Kim Wuyts 47:58

Okay, well, obviously I cannot say anything about what Codific does. I do want to compliment the fact that you have such a broad basis, that all employees have that security and privacy reflection and awareness. I think it’s really important to get that security and privacy culture in there. Just a side note, because we have been talking about threat modeling, and I think we mainly focused here on greenfield projects. So when you start building a system, a product, you integrate threat modeling there. That doesn’t mean that when you already have a system up and running, all is lost. Of course, you can still threat model that one. You even have lots more information there, and you can use the threats you have identified for the next iteration. There’s this one quote by, I think, Avi Douglen and Steven Wierckx, who say the sooner the better, but never too late. So it’s always a good time to do threat modeling. Basically, just get started and extend your practices from there.

Nicolas Montauban 49:14

Okay. Well, that was all the questions I had. Before I stop the recording, I just wanted to give you guys the floor if you want to make any final concluding remarks around privacy threat modeling, why it’s important and why maybe you should think about applying it, if you do have any.

Aram Hovsepyan 49:35

No, I don’t, because I think I said everything I wanted to say already so far.

Kim Wuyts 49:42

Yeah, I think we basically covered it all. So maybe just saying privacy by design is important, and it requires you to integrate privacy in a systematic way. And threat modeling is a great approach to help you do that. So, yeah, have a look and just get started. Everybody can be a threat modeler.

Aram Hovsepyan 50:05

And it’s going to save you money in the long run.

Nicolas Montauban 50:09

Yeah, I think that’s a good place to end.

Aram Hovsepyan 50:12

That’s a concluding remark. It’s going to save your organization quite some money in the long run.